Key Takeaways

AI test case generation reduces manual testing effort significantly while expanding test coverage and catching edge cases humans overlook.

- Organizations using AI-powered testing report up to 60% faster test creation and measurable improvements in early defect detection

- Effective AI test generation requires quality input data, clear requirements, and consistent human oversight

- Teams achieve the best results when AI handles repetitive scenario generation while testers focus on strategic validation and edge case refinement

- Start with a pilot project targeting high-volume, repetitive test areas before scaling AI testing across your workflow

The shift from manual to AI-assisted test case creation is inevitable for teams serious about keeping pace with modern development cycles.

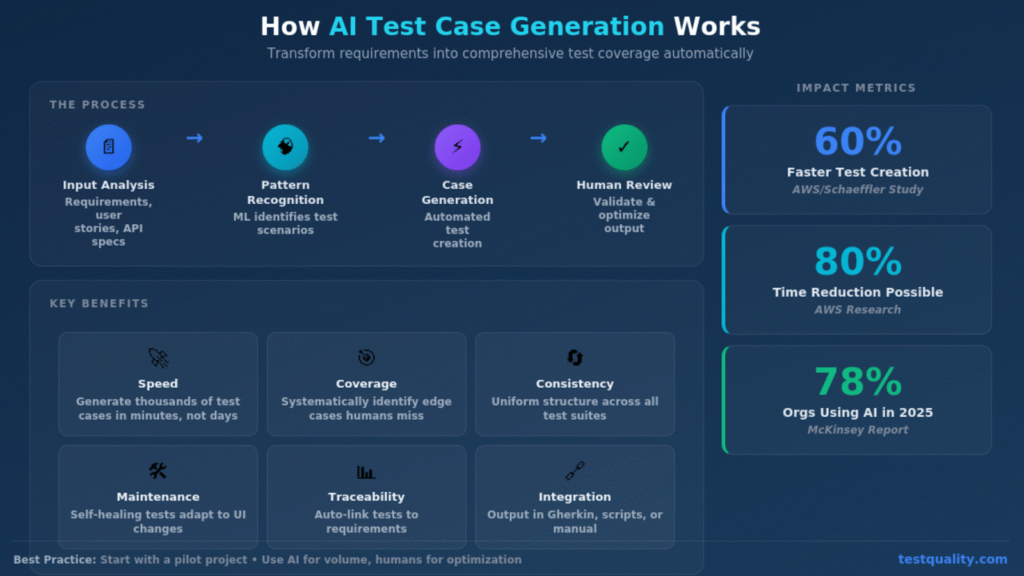

Software testing remains one of the most time-intensive phases of the development lifecycle. According to McKinsey's 2025 State of AI report, 78% of organizations now use AI in at least one business function, and QA teams are increasingly recognizing the potential of AI to transform how they approach test case management. The manual process of writing test cases from requirements, covering edge cases, and maintaining test suites as features evolve demands significant time and expertise. AI test case generation offers a compelling alternative, automating repetitive work while freeing testers to focus on strategic quality initiatives.

But automating test creation isn't simply about speed. The real value emerges when AI helps teams achieve comprehensive coverage they couldn't reach manually, identify scenarios that would slip through traditional approaches, and maintain test quality as applications scale. This guide explores how AI test case generation works, when it makes sense to adopt, and the best practices that separate successful implementations from disappointing experiments.

What Is AI Test Case Generation?

AI test case generation uses machine learning algorithms and large language models to automatically create test scenarios from various inputs. These systems analyze requirements documents, user stories, API specifications, existing test suites, and historical defect data to produce relevant test cases that cover both standard workflows and edge conditions.

The technology goes beyond simple template generation. Modern AI testing tools understand context, recognize patterns in how features should behave, and generate test steps that reflect realistic user interactions. They output test cases in formats compatible with manual execution or automation frameworks, including plain text descriptions, Gherkin syntax for BDD workflows, and executable scripts for popular testing tools.

What distinguishes AI-generated tests from traditional automation is adaptability. When application features change, AI systems can adjust generated test cases to reflect new functionality rather than requiring complete rewrites. This capability addresses one of the most persistent pain points in test automation: the maintenance burden that grows alongside application complexity.

How Does AI Generate Test Cases?

AI test case generation typically moves through several distinct stages that mirror how experienced testers approach test design, with machine learning accelerating each one.

Input Analysis and Understanding

AI systems begin by ingesting available documentation about the feature or system under test. This includes functional requirements, acceptance criteria, user stories, technical specifications, and sometimes the codebase itself. Natural language processing enables the AI to extract testable conditions from written requirements, identifying what the system should do under various circumstances.
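Production tools rely on NLP or large language models for this step. As a deliberately simplified, rule-based stand-in, the sketch below assumes acceptance criteria are written in Given/When/Then form and splits them into the preconditions, actions, and expected outcomes a test needs. `Condition` and `extract_conditions` are hypothetical names, not part of any tool.

```python
import re
from dataclasses import dataclass, field

@dataclass
class Condition:
    """Testable clauses pulled out of one set of acceptance criteria."""
    given: list[str] = field(default_factory=list)  # preconditions
    when: list[str] = field(default_factory=list)   # actions
    then: list[str] = field(default_factory=list)   # expected outcomes

# Matches lines that start with a Gherkin-style keyword (case-insensitive).
KEYWORD = re.compile(r"^\s*(Given|When|Then|And)\s+(.*\S)\s*$", re.IGNORECASE)

def extract_conditions(criteria: str) -> Condition:
    """Rule-based stand-in for NLP extraction (hypothetical helper).

    'And' lines attach to the most recent Given/When/Then section;
    lines without a recognized keyword are ignored.
    """
    cond = Condition()
    section = None
    for line in criteria.splitlines():
        match = KEYWORD.match(line)
        if not match:
            continue
        keyword, clause = match.group(1).lower(), match.group(2)
        if keyword != "and":
            section = keyword
        if section is not None:
            getattr(cond, section).append(clause)
    return cond

criteria = """Given a registered user
And the account is active
When they submit valid credentials
Then the dashboard is displayed"""
print(extract_conditions(criteria))
```

Requirements written as free-form prose would fall through these rules entirely; handling them is the job of the language models described above.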

The quality of input directly impacts output quality. Well-documented requirements with clear acceptance criteria produce more accurate and comprehensive test cases. Teams with sparse documentation often need to supplement AI-generated tests with manual review and additions.

Pattern Recognition and Scenario Generation

Once the AI understands what needs testing, it applies pattern recognition to generate scenarios. Machine learning models trained on large datasets of test cases recognize common testing patterns for specific functionality types. A login feature, for example, triggers generation of positive authentication tests, invalid credential handling, session management scenarios, and security-related edge cases.
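A trained model learns which scenarios belong with which kind of feature from data. The lookup table below only imitates that behavior with hand-written templates to make the login example concrete; the feature categories, template wording, and `generate_scenarios` function are illustrative assumptions.

```python
# Hypothetical pattern library: feature type -> scenario templates.
PATTERNS: dict[str, list[str]] = {
    "login": [
        "Valid credentials grant access to {feature}",
        "Invalid password is rejected by {feature}",
        "Unknown username is rejected by {feature}",
        "Session expires after inactivity on {feature}",
        "Repeated failed attempts trigger lockout on {feature}",  # security edge case
    ],
    "search": [
        "Exact-match query returns results on {feature}",
        "Empty query is handled gracefully on {feature}",
    ],
}

def generate_scenarios(feature_name: str, feature_type: str) -> list[str]:
    """Expand the templates for a recognized feature type; unknown types yield nothing."""
    templates = PATTERNS.get(feature_type.lower(), [])
    return [t.format(feature=feature_name) for t in templates]

for scenario in generate_scenarios("the customer portal", "Login"):
    print(scenario)
```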

The AI identifies boundary conditions and generates tests for values at the edges of valid ranges. It recognizes scenarios that historically correlate with defects and prioritizes coverage for high-risk areas. This systematic approach catches edge cases that manual test design might overlook due to time constraints or assumptions.
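Boundary value analysis is the classic technique behind this: for an input that accepts an inclusive range, test each edge, the value just inside it, and the value just outside it. The sketch below applies it by hand to an assumed age field accepting 18 to 65; it illustrates the technique, not how any particular AI tool implements it.

```python
def boundary_values(minimum: int, maximum: int) -> dict[str, list[int]]:
    """Boundary value analysis for an inclusive integer range.

    Valid: each edge and its inner neighbor. Invalid: just outside each edge.
    """
    if minimum > maximum:
        raise ValueError("minimum must not exceed maximum")
    candidates = {minimum, minimum + 1, maximum - 1, maximum}
    valid = sorted(v for v in candidates if minimum <= v <= maximum)
    return {"valid": valid, "invalid": [minimum - 1, maximum + 1]}

print(boundary_values(18, 65))
# {'valid': [18, 19, 64, 65], 'invalid': [17, 66]}
```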

Output Generation and Formatting

Generated test cases are formatted according to team preferences and tooling requirements. AI can produce step-by-step manual test instructions, Gherkin scenarios for Cucumber or similar BDD frameworks, or parameterized test templates ready for automation. Some advanced tools generate executable test scripts directly, though these typically require human review before deployment.

The output includes preconditions, test steps, expected results, and often test data suggestions. Well-designed AI tools provide traceability back to source requirements, helping teams maintain the connection between tests and business needs.
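The fields described above map naturally onto a simple record that can be rendered as a Gherkin scenario, with the requirement ID carried as a tag for traceability. The class name, field names, and `@REQ-42` tag convention below are assumptions for illustration, not any specific tool's schema.

```python
from dataclasses import dataclass, field

@dataclass
class GeneratedTestCase:
    requirement_id: str          # traceability link back to the source requirement
    title: str
    preconditions: list[str]
    steps: list[str]
    expected_results: list[str]
    test_data: dict[str, str] = field(default_factory=dict)  # suggested inputs

    def to_gherkin(self) -> str:
        """Render as a Gherkin scenario tagged with the requirement ID."""
        lines = [f"@{self.requirement_id}", f"Scenario: {self.title}"]
        for keyword, clauses in (("Given", self.preconditions),
                                 ("When", self.steps),
                                 ("Then", self.expected_results)):
            for i, clause in enumerate(clauses):
                # Repeated clauses within a section use 'And'.
                lines.append(f"  {keyword if i == 0 else 'And'} {clause}")
        return "\n".join(lines)

tc = GeneratedTestCase(
    requirement_id="REQ-42",
    title="Successful login",
    preconditions=["a registered user", "the account is active"],
    steps=["they submit valid credentials"],
    expected_results=["the dashboard is displayed"],
    test_data={"username": "qa_user"},
)
print(tc.to_gherkin())
```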

What Are the Core Benefits of AI Test Case Generation?

Organizations adopting AI test case generation report measurable improvements across several dimensions of testing effectiveness.

Dramatic Time Savings

The most immediately visible benefit is reduced test creation time. Research from AWS and Schaeffler documented a 60% acceleration in test case generation, reducing average time per test case from approximately one hour to nineteen minutes. Their extended research showed test creation time reductions up to 80% when AI was fully integrated into established workflows.

These time savings compound across large test suites. A system with 500 requirements that previously required 20+ person-days for test case creation can potentially be covered in a fraction of that time with AI assistance. Teams redirect saved effort toward exploratory testing, test optimization, and addressing technical debt.
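To see how per-case savings compound, the sketch below applies the per-case averages cited above (about 60 minutes manually versus about 19 minutes with AI) to an assumed suite of 500 test cases. Whether the 19-minute figure includes human review isn't stated here, so review time is exposed as a separate, assumed parameter.

```python
def creation_hours(num_cases: int, minutes_per_case: float,
                   review_minutes_per_case: float = 0.0) -> float:
    """Total effort in hours to produce a suite, including per-case review."""
    return num_cases * (minutes_per_case + review_minutes_per_case) / 60

cases = 500  # illustrative suite size (assumption)
manual = creation_hours(cases, 60)    # ~1 hour per case, per the figures above
assisted = creation_hours(cases, 19)  # ~19 minutes per case, per the figures above
print(f"manual: {manual:.0f} h, AI-assisted: {assisted:.0f} h, "
      f"saved: {manual - assisted:.0f} h")
# manual: 500 h, AI-assisted: 158 h, saved: 342 h
```

If review is not already included, passing a team's measured review time as `review_minutes_per_case` shows how much of the saving survives.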

Expanded Test Coverage

AI systematically identifies scenarios that human testers might deprioritize or overlook. The technology excels at generating comprehensive boundary value tests, negative test cases, and combinations of input parameters, which quickly grow into a combinatorial explosion when enumerated by hand. According to the World Quality Report from Capgemini, organizations implementing AI-enhanced testing approaches consistently report significant improvements in defect detection rates compared to purely manual methods.
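Pairwise (all-pairs) testing is one widely used way to keep parameter combinations manageable, swapped in here for illustration rather than as a description of how any AI tool works. Four parameters with three values each (an assumed example) give 81 exhaustive combinations; the greedy sketch below keeps only enough of them to cover every pair of values at least once.

```python
from itertools import combinations, product

Case = dict[str, str]

def pairs_of(case: Case) -> set:
    """Every ((param, value), (param, value)) pair one test case exercises."""
    return set(combinations(sorted(case.items()), 2))

def pairwise_suite(params: dict[str, list[str]]) -> list[Case]:
    """Greedy all-pairs reduction.

    Repeatedly picks the full combination that covers the most value pairs
    not yet covered, until every pair appears in at least one test case.
    """
    names = sorted(params)
    candidates = [dict(zip(names, values))
                  for values in product(*(params[n] for n in names))]
    uncovered = set().union(*(pairs_of(c) for c in candidates))
    suite: list[Case] = []
    while uncovered:
        best = max(candidates, key=lambda c: len(pairs_of(c) & uncovered))
        suite.append(best)
        uncovered -= pairs_of(best)
    return suite

params = {
    "browser": ["Chrome", "Firefox", "Safari"],
    "os": ["Windows", "macOS", "Linux"],
    "locale": ["en", "de", "ja"],
    "role": ["admin", "editor", "viewer"],
}
full_count = len(list(product(*params.values())))  # 3**4 = 81
suite = pairwise_suite(params)
# 54 value pairs exist and each test covers 6, so at least 9 tests are needed.
print(f"exhaustive: {full_count}, pairwise: {len(suite)}")
```

Greedy selection is simple but not always minimal; dedicated covering-array algorithms can produce smaller suites.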

Coverage expansion proves particularly valuable for complex systems with numerous integration points. AI can generate tests for interaction patterns between components that would require extensive effort to identify manually.

Improved Consistency and Accuracy

Human-written test cases vary in quality based on tester experience, time pressure, and familiarity with the feature being tested. AI-generated tests maintain consistent structure, detail level, and format across the entire test suite. This consistency simplifies test execution and results analysis while reducing ambiguity that can lead to inconsistent test outcomes.

The accuracy of AI-generated tests depends heavily on input quality, but when provided with clear requirements, AI tools produce test cases with fewer gaps and oversights than rushed manual creation.

Reduced Maintenance Burden

Traditional automated test suites often suffer from high maintenance costs as applications evolve. AI-powered testing tools increasingly offer self-healing capabilities that automatically adjust test selectors and steps when UI elements change. This reduces the manual effort required to keep automated tests functional after application updates.
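Commercial self-healing features vary in how they work. The sketch below reduces the idea to its simplest form: an ordered list of fallback locators tried against a page modeled as plain dictionaries, reporting when a fallback was needed. The page model, attribute names, and `find_with_healing` are hypothetical; no browser-automation library is involved.

```python
# Hypothetical page model: each element is a plain dict of attributes.
Element = dict[str, str]

def find_with_healing(page: list[Element],
                      locators: list[tuple[str, str]]) -> tuple[Element, bool]:
    """Try (attribute, value) locators in priority order.

    Returns the matched element and True if a fallback locator was needed,
    i.e. the primary locator broke and the lookup "healed".
    """
    for index, (attribute, value) in enumerate(locators):
        for element in page:
            if element.get(attribute) == value:
                return element, index > 0
    raise LookupError(f"no locator matched: {locators}")

# After a redesign the button's id changed, but its test id did not.
page = [{"tag": "button", "id": "btn-submit-v2",
         "data-testid": "login-submit", "text": "Sign in"}]
locators = [("id", "btn-submit"), ("data-testid", "login-submit"), ("text", "Sign in")]
element, healed = find_with_healing(page, locators)
print(element["id"], healed)  # btn-submit-v2 True
```

A fuller version might also update the stored primary locator after a successful fallback and flag the change for human review, so that healing does not silently mask a genuine regression.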

What Challenges Should Teams Anticipate?

AI test case generation isn't a magic solution. Teams encounter predictable challenges that require thoughtful mitigation.

Data Quality Dependencies

AI effectiveness correlates directly with input quality. Poorly documented requirements, vague user stories, and inconsistent specifications produce correspondingly weak test cases. Organizations with documentation debt often need to invest in improving their requirements before realizing full AI testing benefits.
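A lightweight pre-check can flag requirements likely to produce weak generated tests before they reach an AI tool. The heuristic below, including its list of vague terms and the `lint_requirement` name, is an assumption for illustration rather than an established standard.

```python
import re

# Illustrative (assumed) terms that often signal an untestable requirement.
VAGUE_TERMS = ("fast", "user-friendly", "intuitive", "appropriate", "etc", "as needed")

def lint_requirement(text: str, acceptance_criteria: list[str]) -> list[str]:
    """Return human-readable warnings about a requirement's testability."""
    warnings = []
    if not acceptance_criteria:
        warnings.append("no acceptance criteria")
    for term in VAGUE_TERMS:
        # Whole-word, case-insensitive match so 'breakfast' does not trip 'fast'.
        if re.search(rf"\b{re.escape(term)}\b", text, re.IGNORECASE):
            warnings.append(f"vague term: {term!r}")
    return warnings

print(lint_requirement("The page must load fast.", []))
# ['no acceptance criteria', "vague term: 'fast'"]
```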

Historical test data and defect logs improve AI accuracy over time, but teams starting fresh may not have sufficient training data for optimal results.

Skill Gap and Learning Curve

Implementing AI testing tools requires new competencies. Teams need members who understand how to prompt AI effectively, evaluate generated output, and integrate AI tools with existing test management and automation infrastructure. Gartner predicts that 80% of engineers will need AI upskilling by 2027, and testing teams face similar requirements.

Over-Reliance Risks

AI-generated tests require human validation before production use. Teams that blindly trust AI output risk deploying incomplete or irrelevant tests. The technology generates test cases that "look right" but may miss critical business logic nuances that only domain experts would catch.

Effective implementations maintain human testers in strategic oversight roles, reviewing AI output, adding specialized tests for complex scenarios, and ensuring generated tests align with actual quality objectives.
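This oversight can be enforced mechanically: generated tests start out pending, and only those a named human reviewer has approved are promoted to the production suite. The status values and function names below are hypothetical.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional

class ReviewStatus(Enum):
    PENDING = "pending"
    APPROVED = "approved"
    REJECTED = "rejected"

@dataclass
class GeneratedTest:
    title: str
    status: ReviewStatus = ReviewStatus.PENDING  # AI output starts unreviewed
    reviewer: Optional[str] = None

def review(test: GeneratedTest, reviewer: str, approve: bool) -> None:
    """Record a human decision on an AI-generated test."""
    test.status = ReviewStatus.APPROVED if approve else ReviewStatus.REJECTED
    test.reviewer = reviewer

def promotable(tests: list[GeneratedTest]) -> list[GeneratedTest]:
    """Only tests approved by a named reviewer may enter the production suite."""
    return [t for t in tests if t.status is ReviewStatus.APPROVED and t.reviewer]

drafts = [GeneratedTest("Valid login"), GeneratedTest("Refund over limit"),
          GeneratedTest("Untouched draft")]
review(drafts[0], "dana", approve=True)
review(drafts[1], "dana", approve=False)
print([t.title for t in promotable(drafts)])  # ['Valid login']
```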

Initial Investment Requirements

Quality AI testing tools require a meaningful investment in licensing, integration effort, and training. Organizations must weigh upfront costs against expected productivity gains and make realistic assessments of time to value.

What Best Practices Lead to AI Test Case Generation Success?

Teams achieving the strongest results follow consistent patterns in their AI testing implementations.

- Start with a focused pilot project rather than attempting organization-wide deployment. Select a well-documented feature area with clear requirements where AI can demonstrate measurable value quickly.

- Establish clear human review processes for all AI-generated tests before they enter production test suites. Define quality criteria and assign experienced testers to validate AI output.

- Invest in requirements quality as a prerequisite for AI testing success. Improve acceptance criteria clarity, document edge cases explicitly, and maintain updated specifications.

- Integrate AI generation with existing test management workflows rather than treating it as a separate tool. Ensure generated tests flow into your test case management system with appropriate metadata and traceability.

- Use AI for initial generation and humans for optimization. Let AI handle volume production of standard scenarios while testers focus on creative edge cases, business logic validation, and test refinement.

- Track metrics that matter, including actual defect detection rates, not just test case counts. More tests don't equal better quality; effective tests that find real defects do.

- Plan for continuous learning by feeding test execution results back into AI systems. Quality AI tools improve accuracy over time when informed by which generated tests actually detect defects.
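The last two practices can be sketched together: compute, from execution results, how often tests of each origin actually expose defects, and collect the AI-generated tests that found defects as signals to feed back into generation. The result format and the metric definition (share of executed tests that found at least one defect) are assumptions; teams define detection metrics in different ways.

```python
from collections import Counter

def detection_rate_by_origin(results: list[dict]) -> dict[str, float]:
    """Share of executed tests, per origin, that exposed at least one defect.

    Each result is assumed to look like
    {"test_id": "T1", "origin": "ai" or "manual", "defects_found": 0}.
    """
    executed = Counter(r["origin"] for r in results)
    detecting = Counter(r["origin"] for r in results if r["defects_found"] > 0)
    return {origin: detecting[origin] / executed[origin] for origin in executed}

def feedback_examples(results: list[dict]) -> list[str]:
    """IDs of AI-generated tests that found defects (hypothetical feedback signal)."""
    return [r["test_id"] for r in results
            if r["origin"] == "ai" and r["defects_found"] > 0]

results = [
    {"test_id": "T1", "origin": "ai", "defects_found": 1},
    {"test_id": "T2", "origin": "ai", "defects_found": 0},
    {"test_id": "T3", "origin": "ai", "defects_found": 0},
    {"test_id": "T4", "origin": "ai", "defects_found": 2},
    {"test_id": "T5", "origin": "manual", "defects_found": 1},
]
print(detection_rate_by_origin(results))  # {'ai': 0.5, 'manual': 1.0}
print(feedback_examples(results))         # ['T1', 'T4']
```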

How Does AI-Assisted Test Creation Compare to Manual Test Creation?

Understanding where each approach excels helps teams allocate effort effectively.

| Aspect | Manual Test Creation | AI-Assisted Test Creation |
|---|---|---|
| Time per test case | 5-60 minutes depending on complexity | Seconds to minutes for generation, plus review time |
| Coverage breadth | Limited by tester time and attention | Systematically comprehensive for documented requirements |
| Edge case identification | Depends on tester experience | Consistent pattern-based identification |
| Business logic understanding | Strong contextual judgment | Requires clear documentation to match human insight |
| Maintenance effort | High manual updating required | Self-healing capabilities reduce ongoing effort |
| Best application | Complex business logic, exploratory scenarios | High-volume standard scenarios, regression suites |

Most successful teams use hybrid approaches, leveraging AI for volume production while reserving manual effort for tests requiring deep domain expertise.

When Should Teams Adopt AI Test Case Generation?

AI test case generation delivers the strongest ROI for teams in the following circumstances.

High-Volume Test Requirements

Teams maintaining test suites with hundreds or thousands of test cases benefit most from AI generation. The time savings compound significantly at scale, and AI consistency becomes increasingly valuable as manual oversight of large suites becomes impractical.

Rapid Release Cycles

Organizations practicing continuous delivery need test creation to keep pace with development velocity. AI generation helps teams maintain coverage as features evolve without becoming a bottleneck in the release pipeline.

Documentation-Rich Environments

Teams with mature requirements management practices and well-documented acceptance criteria see the fastest time to value from AI testing tools. The investment in quality documentation pays dividends in AI output accuracy.

Growing Test Automation Initiatives

Organizations expanding their automated testing programs find AI generation accelerates the transition from manual to automated execution. AI can produce test scenarios in automation-ready formats, reducing the effort to build comprehensive automated suites.

Frequently Asked Questions

Can AI completely replace human testers in test case creation?

No. AI excels at generating comprehensive standard scenarios and identifying systematic edge cases, but human testers remain essential for validating business logic, designing creative exploratory tests, and ensuring generated tests align with actual quality objectives. The most effective approach combines AI generation with human review and enhancement.

What types of applications benefit most from AI test case generation?

Applications with well-documented requirements, complex user interfaces, extensive API surfaces, and high regression testing needs see the strongest benefits. Web applications, enterprise software with numerous business rules, and systems undergoing frequent updates particularly benefit from AI-assisted testing.

How much time does it take to see ROI from AI testing tools?

Teams with mature documentation and clear requirements often see measurable time savings within the first month of implementation. Organizations needing to improve requirements quality first may require three to six months before realizing full benefits. Pilot projects help establish realistic expectations before broader deployment.

Do AI-generated tests integrate with existing automation frameworks?

Most AI testing tools output tests in standard formats compatible with popular frameworks including Selenium, Playwright, Cucumber, and others. Integration complexity varies by tool, but modern solutions prioritize compatibility with established testing ecosystems.

Start Building Smarter Test Cases Today

AI test case generation represents a practical evolution in how testing teams approach quality assurance. The technology has matured beyond experimental status into a reliable capability that delivers measurable improvements in efficiency and coverage when implemented thoughtfully.

Success requires realistic expectations, quality inputs, and maintained human oversight. Teams that treat AI as a powerful assistant rather than a replacement for testing expertise achieve the strongest outcomes.

For teams ready to explore AI-powered test creation, TestQuality offers a free AI Test Case Builder that integrates directly with unified test management workflows. The platform connects AI-generated tests with execution tracking, defect management, and analytics to help teams realize the full potential of automated test creation. Start your free trial and experience how modern test management transforms quality outcomes.