AI-powered test case design can slash creation time by up to 80%, but manual expertise remains essential for context-rich scenarios that require human judgment.

- AI excels at generating high-volume test cases quickly and detecting edge cases that humans might overlook.

- Manual test design delivers superior results for exploratory testing, complex business logic, and user experience validation.

- Hybrid testing methods, which combine both approaches, consistently outperform either method used alone.

- Successful migration requires clear goals, phased adoption, and ongoing human oversight of AI outputs.

Teams that master the balance between AI efficiency and manual precision will dominate QA productivity in 2026 and beyond.

The debate around manual vs AI test case design has intensified as QA teams face mounting pressure to release faster without sacrificing quality. Industry analysts forecast that generative AI will require 80% of the engineering workforce to upskill through 2027. That forecast alone signals a shift in how testing professionals must approach their craft.

AI is reshaping manual test design. The teams winning right now are strategically deploying both methods based on specific testing needs. Understanding when each approach delivers maximum value separates efficient QA operations from those drowning in technical debt and escaped defects.

What Does Manual vs AI Test Case Design Really Mean?

Before diving into efficiency metrics, let's ground ourselves in what these approaches actually involve. Manual test case design relies on human testers analyzing requirements, user stories, and system specifications to craft test scenarios. This process demands domain expertise, critical thinking, and an intuitive understanding of how users interact with software.

AI test case design leverages machine learning algorithms and large language models to automatically generate test cases from various inputs. These inputs include requirements documents, API specifications, user interface mockups, and historical defect data. The AI analyzes patterns and produces test scenarios that would take human testers much longer to create manually.

The main difference comes down to this: manual design trades speed for nuance, while AI trades nuance for speed. Neither offers a complete solution. Smart teams recognize that effective test case management requires leveraging the strengths of both approaches.

How Do AI and Manual Test Case Design Compare?

The efficiency comparison between manual and AI test case design depends heavily on what you're measuring. Let's break down the key dimensions where each approach shows distinct advantages.

Speed and Throughput

AI dominates when it comes to raw output velocity. Manual test case creation requires careful analysis, domain knowledge, and iterative refinement. AI tools accelerate this process. An AWS case study on generative AI demonstrated test case creation time reductions of up to 80% in automotive software engineering environments.

This speed advantage compounds during regression testing cycles. When application changes require updating dozens or hundreds of existing test cases, AI-powered tools can propagate those changes far faster than manual updates. Self-healing test capabilities automatically adjust scripts when UI elements change, eliminating hours of maintenance work.
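
To make "self-healing" concrete, here is a minimal Python sketch of one common idea behind it: a locator that tries an ordered list of fallback attributes and promotes whichever one still matches. The page model (a list of attribute dicts), the attribute names, and the `SelfHealingLocator` class are hypothetical. Commercial tools use their own, often ML-based, matching against a live DOM, and this is not any vendor's implementation.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Tuple

# Hypothetical page model: each element is a dict of attributes. A real tool
# would query a live DOM through a browser driver instead.
Element = Dict[str, str]


@dataclass
class SelfHealingLocator:
    """Look up an element using an ordered list of (attribute, value) candidates."""

    candidates: List[Tuple[str, str]]
    healed_log: List[str] = field(default_factory=list)

    def find(self, page: List[Element]) -> Optional[Element]:
        for index, (attr, value) in enumerate(self.candidates):
            for element in page:
                if element.get(attr) == value:
                    if index > 0:
                        # A fallback matched: promote it so future lookups try it
                        # first, and log the repair so a human can confirm it.
                        self.candidates.insert(0, self.candidates.pop(index))
                        self.healed_log.append(f"healed: now using {attr}={value}")
                    return element
        # No candidate matched: report a genuine failure rather than guessing.
        return None


# Usage: the button's id changed in a new release, but its test hook did not.
submit = SelfHealingLocator([("id", "submit-btn"), ("data-test", "login-submit")])
new_release = [{"id": "btn-primary", "data-test": "login-submit", "text": "Submit"}]
print(submit.find(new_release)["text"])  # Submit
print(submit.healed_log)  # ['healed: now using data-test=login-submit']
```

Logging each repair matters: a silent heal can mask a real regression, so the log gives a reviewer something concrete to confirm.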

However, speed without accuracy creates more problems than it solves. AI-generated test cases frequently require human review to ensure business logic accuracy and relevance. Teams that skip this validation step often end up with bloated test suites full of low-value or redundant scenarios.
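
One cheap guard against bloated suites is to flag near-duplicate drafts before they reach a reviewer. The sketch below assumes test cases arrive as plain-text descriptions and uses character-level similarity from Python's standard `difflib`. The 0.9 threshold is an arbitrary assumption, and duplicates that are worded differently would need a stronger method, such as comparing text embeddings.

```python
from difflib import SequenceMatcher
from typing import List, Tuple


def normalize(text: str) -> str:
    """Lowercase and collapse whitespace so case and spacing don't hide a duplicate."""
    return " ".join(text.lower().split())


def split_near_duplicates(cases: List[str], threshold: float = 0.9) -> Tuple[List[str], List[str]]:
    """Return (kept, flagged). A case is flagged when it is at least `threshold`
    similar to a case already kept; flagged cases go to a reviewer instead of
    being deleted silently."""
    kept: List[str] = []
    flagged: List[str] = []
    for case in cases:
        norm = normalize(case)
        if any(SequenceMatcher(None, norm, normalize(k)).ratio() >= threshold for k in kept):
            flagged.append(case)
        else:
            kept.append(case)
    return kept, flagged


drafts = [
    "Login with valid email and password succeeds",
    "login with  valid email and password succeeds.",
    "Login with expired password shows reset prompt",
]
kept, flagged = split_near_duplicates(drafts)
print(flagged)  # ['login with  valid email and password succeeds.']
```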

Test Coverage and Edge Case Detection

AI shines at identifying edge cases that human testers might overlook. By analyzing large datasets of user behavior, historical defects, and system interactions, AI can surface test scenarios that might not occur even to experienced testers. This pattern recognition extends test coverage into corners of the application that manual approaches often miss.
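
As a deliberately simple stand-in for the pattern recognition described above, the sketch below counts which (component, input type) pairs recur in historical defect records and ranks them as candidates for extra edge-case tests. The `Defect` record and its labels are hypothetical, and real AI tools model far richer signals than a frequency count.

```python
from collections import Counter
from typing import List, NamedTuple, Tuple


class Defect(NamedTuple):
    component: str   # hypothetical module name, e.g. "checkout"
    input_kind: str  # hypothetical label for the triggering input, e.g. "unicode"


def edge_case_hotspots(history: List[Defect], top_n: int = 3) -> List[Tuple[Tuple[str, str], int]]:
    """Rank (component, input_kind) pairs by how many past defects they caused.

    Recurring pairs are candidates for extra edge-case tests, whether or not
    an existing test already touches them.
    """
    counts = Counter((d.component, d.input_kind) for d in history)
    return counts.most_common(top_n)


history = [
    Defect("checkout", "empty"),
    Defect("checkout", "unicode"),
    Defect("search", "max_length"),
    Defect("checkout", "unicode"),
    Defect("checkout", "unicode"),
]
print(edge_case_hotspots(history, top_n=1))  # [(('checkout', 'unicode'), 3)]
```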

Manual testing excels at exploratory scenarios where human intuition detects anomalies that don't fit predefined patterns. Seasoned testers develop instincts about where bugs hide through years of hands-on work. This tacit knowledge is difficult to replicate in AI models, regardless of how much training data they consume.

The coverage question also depends on the test type. AI proves highly effective for functional testing with clear inputs and outputs. For usability testing, accessibility validation, and complex user journey scenarios, manual approaches still deliver superior results.

Maintenance and Scalability

Test maintenance is one of the most impactful hidden costs in QA operations. AI-powered tools with self-healing capabilities reduce this burden by automatically updating test scripts when application changes occur. This scalability advantage becomes vital for teams managing large test suites across multiple environments.

Manual maintenance capacity grows only linearly with team size, while the effort it demands often grows non-linearly with application complexity. As codebases grow, keeping test cases current can consume resources that should go toward new feature validation. Teams relying purely on manual approaches frequently face difficult choices between test coverage and release timelines.

| Factor | Manual Test Design | AI Test Design |
|---|---|---|
| Speed | Hours to days per comprehensive suite | Up to 80% faster than manual |
| Edge Case Detection | Limited by tester experience | Pattern-based, comprehensive |
| Context Understanding | Excellent | Requires validation |
| Maintenance Effort | High, scales with suite size | Low with self-healing |
| Initial Setup | Minimal | Moderate to high |
| Business Logic Accuracy | High | Variable, needs review |
| Cost at Scale | Linear increase | Marginal increase |

When Should Teams Choose Manual Testing Over AI?

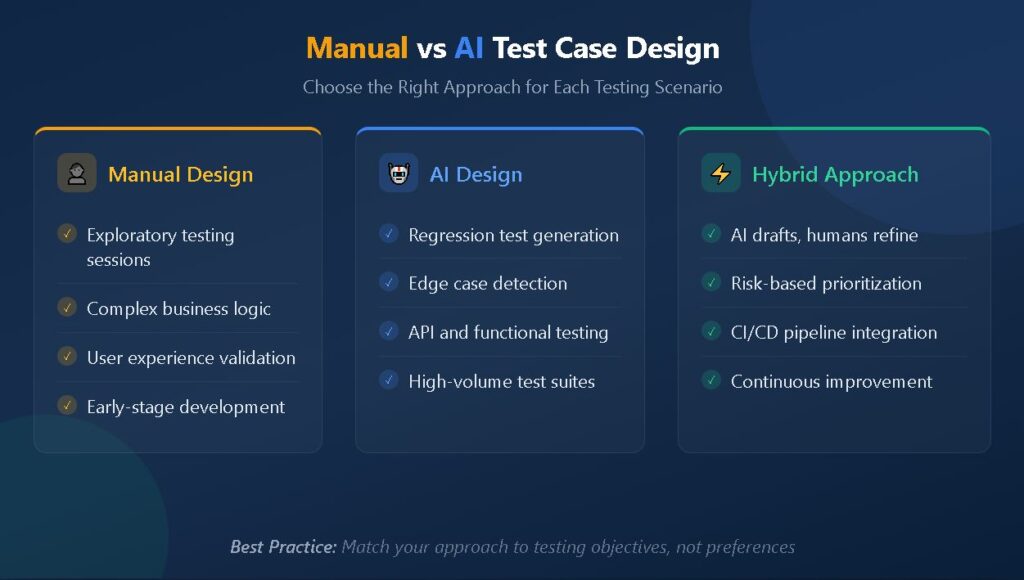

Despite AI's efficiency advantages, manual test case design remains essential for specific scenarios where human judgment delivers irreplaceable value. Understanding these situations helps teams allocate resources effectively.

Exploratory testing demands human creativity and intuition. When investigating unknown system behaviors, discovering unexpected defects, or validating user experience quality, manual testers bring cognitive flexibility that AI can't match. These sessions often uncover critical issues that scripted tests would never find.

Complex business logic validation benefits from manual approaches. When test scenarios require deep understanding of regulatory requirements, financial calculations, or industry-specific workflows, human testers with domain expertise consistently outperform AI-generated alternatives. The cost of AI misunderstanding subtle business rules often exceeds the time saved in test creation.

User experience testing requires human empathy. Evaluating whether an application feels intuitive, whether error messages provide helpful guidance, and whether workflows make sense to end users demands human perception. AI can verify functional correctness, but it cannot evaluate subjective quality factors.

Early-stage product development favors manual approaches. When requirements remain fluid and the product vision evolves rapidly, investing heavily in AI-generated test suites creates waste. Manual testing provides the flexibility to adapt quickly without maintaining test infrastructure that may become obsolete.

How Do QA Teams Implement Hybrid Testing Methods?

The most sophisticated QA organizations have moved beyond the AI vs manual testing debate entirely. They've embraced hybrid testing methods that combine both approaches based on testing objectives, project phase, and risk profile.

Hybrid approaches typically use AI to generate initial test case drafts, which human testers then review, refine, and augment with context-specific scenarios. This workflow captures AI's speed advantage while ensuring business logic accuracy and comprehensive coverage. The result often exceeds what either approach could achieve independently.
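
The draft, review, and publish flow can be sketched as a small state model in Python. Everything here (`CaseDraft`, `Status`, `review`, `publishable`) is a hypothetical illustration rather than any test management tool's API. The point is the gate: only cases a human has approved are published, and the reviewer can append context-specific steps on the way through.

```python
from dataclasses import dataclass
from enum import Enum
from typing import List, Optional


class Status(Enum):
    AI_DRAFT = "ai_draft"   # generated, not yet seen by a human
    APPROVED = "approved"   # reviewed and accepted into the suite
    REJECTED = "rejected"   # reviewed and discarded (wrong, redundant, out of scope)


@dataclass
class CaseDraft:
    title: str
    steps: List[str]
    status: Status = Status.AI_DRAFT
    reviewer: str = ""


def review(case: CaseDraft, reviewer: str, approve: bool,
           extra_steps: Optional[List[str]] = None) -> CaseDraft:
    """Record a human decision; the reviewer may add context-specific steps."""
    if extra_steps:
        case.steps.extend(extra_steps)
    case.status = Status.APPROVED if approve else Status.REJECTED
    case.reviewer = reviewer
    return case


def publishable(cases: List[CaseDraft]) -> List[CaseDraft]:
    """Only human-approved cases enter the production suite."""
    return [c for c in cases if c.status is Status.APPROVED]


drafts = [
    CaseDraft("Valid login", ["open login page", "submit valid credentials"]),
    CaseDraft("Valid login (duplicate)", ["open login page", "submit valid credentials"]),
]
review(drafts[0], "qa-lead", approve=True, extra_steps=["verify audit log entry"])
review(drafts[1], "qa-lead", approve=False)
print([c.title for c in publishable(drafts)])  # ['Valid login']
```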

The human role in hybrid testing methods shifts from test creation to test curation. Rather than writing every test case from scratch, testers focus on writing effective test cases that address gaps in AI-generated coverage, validating AI outputs, and contributing domain expertise that improves AI model performance over time.

Risk-based prioritization guides how teams blend approaches. High-risk features with significant business impact warrant more manual attention. Lower-risk areas with stable requirements can rely more heavily on AI-generated tests. This allocation ensures human expertise concentrates where it delivers maximum value.
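
A common way to make risk-based prioritization explicit is an impact-times-likelihood score. The sketch below assumes a 1 to 5 scale for business impact and for requirement volatility (standing in for likelihood), multiplies them, and routes anything at or above a threshold to manual-led design. The scales, the threshold of 12, and the feature names are all assumptions a team would tune to its own context.

```python
from dataclasses import dataclass


@dataclass
class Feature:
    name: str
    business_impact: int         # assumed scale: 1 (cosmetic) to 5 (revenue or compliance critical)
    requirement_volatility: int  # assumed scale: 1 (stable) to 5 (changing every sprint)


def risk_score(feature: Feature) -> int:
    """Impact x likelihood style score on a 1..25 scale; volatility stands in for likelihood."""
    return feature.business_impact * feature.requirement_volatility


def design_mode(feature: Feature, manual_threshold: int = 12) -> str:
    """Route high-risk features to manual-led design and the rest to reviewed AI drafts."""
    if risk_score(feature) >= manual_threshold:
        return "manual-led"
    return "AI-drafted, human-reviewed"


for f in [Feature("payments", 5, 4), Feature("profile avatar", 2, 1)]:
    print(f.name, risk_score(f), design_mode(f))
# payments 20 manual-led
# profile avatar 2 AI-drafted, human-reviewed
```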

Integration with CI/CD pipelines enables continuous hybrid testing. AI handles rapid feedback loops for regression scenarios while manual testers focus on new feature validation and exploratory sessions. This division of labor maximizes QA productivity without sacrificing thoroughness.

What Are the Key Migration Tips for Adopting AI Test Case Design?

Transitioning from purely manual approaches to AI-augmented testing requires thoughtful planning. Teams that rush adoption often experience disappointment when AI fails to meet inflated expectations. These migration tips help organizations capture AI's benefits while avoiding common pitfalls.

- Start with clear, limited objectives. Don't attempt to AI-enable your entire test suite simultaneously. Choose a specific area, like regression testing or API validation, where AI demonstrates proven value. Success in a limited scope builds organizational confidence for broader adoption.

- Establish baseline metrics before implementation. Measure current test creation time, coverage percentages, defect escape rates, and maintenance effort. Without baselines, you can't objectively evaluate whether AI delivers promised improvements.

- Invest in data quality. AI test generation quality directly correlates with input quality. Clean requirements documentation, well-structured user stories, and organized historical test data improve AI outputs.

- Maintain human oversight throughout. Every AI-generated test case should receive human review before entering production test suites. This validation catches hallucinations, logical errors, and missing business context that AI models frequently produce.

- Plan for the learning curve. Team members need time to develop prompt engineering skills, understand AI tool capabilities and limitations, and establish effective human-AI collaboration workflows. Budget training time into your migration timeline.

- Iterate and refine continuously. Initial AI implementations rarely achieve optimal results. Treat adoption as an ongoing improvement process where teams refine prompts, adjust configurations, and evolve workflows based on accumulated experience.
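
The baseline step is easier to act on when each metric has a precise definition. This sketch assumes defect escape rate means production defects divided by all defects found (pre-release plus production), and coverage means requirements covered divided by total requirements. The field names and sample numbers are hypothetical, not benchmark data.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass
class QAMetrics:
    hours_per_test_case: float
    requirements_covered: int
    requirements_total: int
    defects_found_pre_release: int
    defects_escaped: int  # defects first reported in production

    @property
    def coverage_pct(self) -> float:
        return 100.0 * self.requirements_covered / self.requirements_total

    @property
    def escape_rate_pct(self) -> float:
        total = self.defects_found_pre_release + self.defects_escaped
        return 100.0 * self.defects_escaped / total if total else 0.0


def compare(baseline: QAMetrics, pilot: QAMetrics) -> Dict[str, float]:
    """Relative change in creation time; percentage-point change in coverage and escape rate."""
    return {
        "creation_time_change_pct": 100.0 * (pilot.hours_per_test_case - baseline.hours_per_test_case)
        / baseline.hours_per_test_case,
        "coverage_change_pts": pilot.coverage_pct - baseline.coverage_pct,
        "escape_rate_change_pts": pilot.escape_rate_pct - baseline.escape_rate_pct,
    }


# Hypothetical numbers for illustration only.
baseline = QAMetrics(2.0, 80, 100, 45, 5)
pilot = QAMetrics(1.2, 88, 100, 46, 4)
for name, value in compare(baseline, pilot).items():
    print(f"{name}: {value:+.1f}")
# creation_time_change_pct: -40.0
# coverage_change_pts: +8.0
# escape_rate_change_pts: -2.0
```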

FAQ

What is the main difference between manual and AI test case design? Manual test case design relies on human testers analyzing requirements and crafting scenarios based on domain expertise and intuition. AI test case design uses machine learning to automatically generate test cases from inputs like requirements documents, API specs, and historical data. Manual approaches excel at contextual understanding, while AI delivers speed and pattern-based coverage.

Can AI completely replace manual test case design? No. AI cannot replicate human judgment for exploratory testing, complex business logic validation, and user experience assessment. Most experts recommend hybrid testing methods that combine AI efficiency with human expertise for optimal results. AI serves best as an amplifier for human testers rather than a replacement.

How much faster is AI test case generation compared to manual methods? AI can reduce test case creation time by up to 80% compared to manual methods, according to an AWS case study on generative AI in automotive software engineering. However, AI outputs typically require human review for accuracy, which adds time back into the overall process.
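
The effect of review time on that headline figure is simple arithmetic. The sketch below uses hypothetical inputs (a 10-hour manual effort, the 80% upper bound, and 3 hours of review) to show how the net saving shrinks. Savings disappear entirely once review takes as long as the time AI saved, which is 8 of the 10 hours in this example.

```python
def net_time_saving_pct(manual_hours: float, ai_reduction: float, review_hours: float) -> float:
    """Net percentage of time saved once human review is added back.

    ai_reduction is the fractional cut in creation time (0.8 for "up to 80%").
    """
    ai_hours = manual_hours * (1.0 - ai_reduction)
    return 100.0 * (manual_hours - (ai_hours + review_hours)) / manual_hours


# Hypothetical suite: 10 hours by hand, AI at the 80% upper bound, 3 hours of review.
print(f"{net_time_saving_pct(10, 0.8, 3):.0f}%")  # 50%
```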

What are hybrid testing methods? Hybrid testing methods combine manual and AI approaches based on testing objectives and risk profiles. Typically, AI generates initial test case drafts that human testers review, refine, and supplement with context-specific scenarios. This approach captures AI's speed while ensuring accuracy and comprehensive coverage.

Build Your Testing Strategy for the Future

The manual vs AI test case design question doesn't have a universal answer. What works brilliantly for one team may fail completely for another based on application complexity, team expertise, and organizational constraints. The winning strategy involves an honest assessment of where each approach delivers value in your specific context.

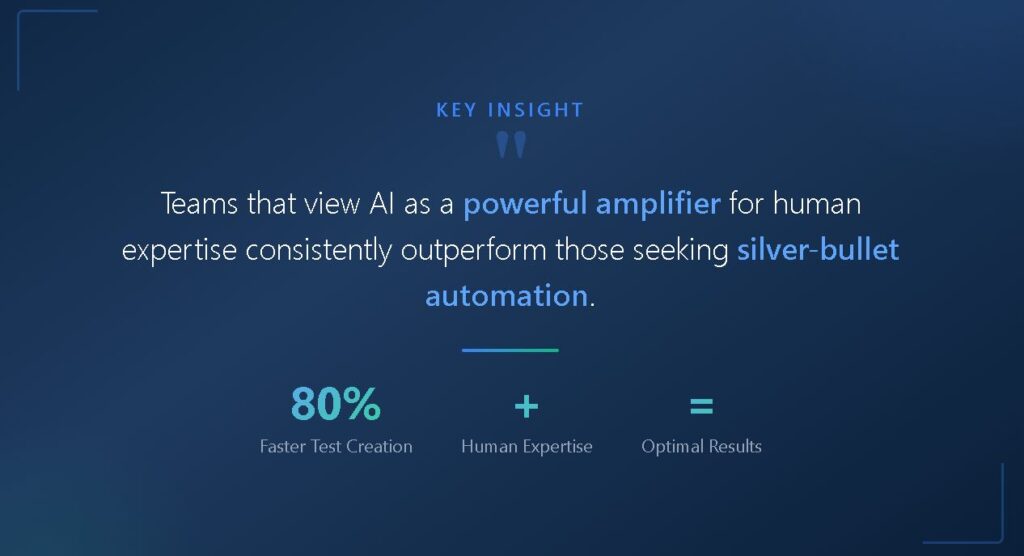

QA productivity gains come from intelligent combination rather than wholesale replacement. Teams that view AI as a powerful amplifier for human expertise consistently outperform those seeking silver-bullet automation. The future belongs to hybrid testing approaches that harness AI efficiency while preserving human judgment where it matters most.

Managing this complexity requires robust test management infrastructure that supports both manual and automated workflows seamlessly. TestQuality provides the unified platform QA teams need to orchestrate hybrid testing strategies, track results across approaches, and maintain visibility into overall quality metrics. Start your free trial today and discover how modern test management accelerates your team's evolution toward AI-augmented quality assurance.