Key Takeaways

Well-crafted Gherkin test cases serve as living documentation that bridges technical teams and business stakeholders while remaining easy to update as requirements evolve.

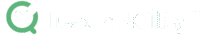

- Declarative scenarios describing behaviors rather than implementation details survive UI changes without rewrites

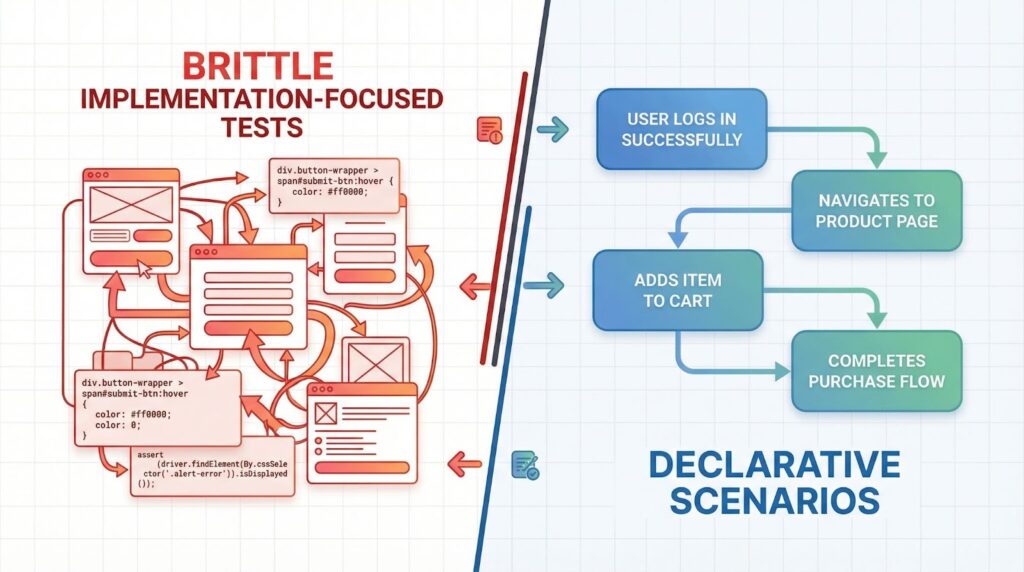

- Independent, single-behavior scenarios reduce debugging time and prevent cascading test failures

- Consistent terminology and step reuse cut maintenance effort by eliminating duplicate code

- Consider integrating your Gherkin workflow with a unified test management platform to maintain traceability from requirements through execution.

BDD testing has become a cornerstone of modern quality assurance, with over 60% of agile teams now using behavior-driven development to align software behaviors with user needs. Yet many teams struggle with test suites that grow brittle and expensive to maintain over time. The problem rarely lies with Gherkin syntax itself. Poor maintainability typically stems from scenarios that focus on implementation mechanics rather than business value, inconsistent language that prevents step reuse, and scenarios that test multiple behaviors simultaneously.

Writing Gherkin test cases that remain valuable months or years after creation requires intentional design choices. Teams that master these principles find their BDD investments compound over time rather than becoming technical debt. The scenarios become true living documentation that evolves alongside the product.

Why Do Gherkin Test Cases Become Unmaintainable?

Understanding the root causes of maintenance pain helps teams avoid common traps. Most unmaintainable test suites share recognizable patterns that accumulate gradually until the cost of updating tests rivals the cost of developing features.

The most pervasive issue involves scenarios written at the wrong level of abstraction. When tests describe clicking specific buttons, entering text into particular fields, and waiting for specific elements to appear, every UI change requires scenario updates. A simple redesign that moves a button or renames a form field can break dozens of scenarios that were technically passing before the change.

The Implementation Detail Trap

Consider a login test that specifies navigating to a URL, locating an element by CSS selector, entering credentials character by character, and clicking a button with a specific ID. This test validates login functionality, but it does so by encoding implementation assumptions that will change. When the development team adopts a new frontend framework or redesigns the authentication flow, these tests become obstacles rather than safety nets.

The alternative approach describes the same behavior without implementation coupling. A scenario stating that a registered user can access their dashboard after providing valid credentials captures the business requirement. The underlying step definitions handle the mechanics, and those can change without touching the feature files.
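The separation described above can be sketched in a few lines of Python. This is a minimal illustration, not a real BDD runner: `FakeApp`, the step function names, and the `ctx` dictionary are all hypothetical stand-ins, and the scenario text is kept as a plain string only to show what stakeholders would read.

```python
# Hypothetical in-memory stand-in for the application under test.
class FakeApp:
    def __init__(self):
        self.users = {}              # email -> password
        self.current_page = "login"

    def register(self, email, password):
        self.users[email] = password

    def submit_login(self, email, password):
        # In a real suite, selectors, URLs, and waits would live at this level.
        if self.users.get(email) == password:
            self.current_page = "dashboard"
        else:
            self.current_page = "login"


# Declarative scenario text that stakeholders review (kept as data here).
SCENARIO = """\
Scenario: Registered user can access dashboard
  Given a registered user
  When the user logs in with valid credentials
  Then the user sees their dashboard
"""


# Step definitions: the only place that knows how login is performed.
def given_a_registered_user(ctx):
    ctx["app"] = FakeApp()
    ctx["credentials"] = ("pat@example.test", "s3cret")
    ctx["app"].register(*ctx["credentials"])


def when_user_logs_in_with_valid_credentials(ctx):
    ctx["app"].submit_login(*ctx["credentials"])


def then_user_sees_dashboard(ctx):
    assert ctx["app"].current_page == "dashboard"


def run_scenario():
    ctx = {}
    given_a_registered_user(ctx)
    when_user_logs_in_with_valid_credentials(ctx)
    then_user_sees_dashboard(ctx)
    return ctx["app"].current_page
```

If the login flow were redesigned, only `submit_login` and the step functions would need to change; the scenario text would stay as written.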

Scenario Coupling and Order Dependencies

Another maintainability killer emerges when scenarios depend on each other. If scenario B assumes scenario A has already run and created certain data, any failure in A causes B to fail even when B's underlying functionality works correctly. Debugging becomes a nightmare of tracing dependencies across multiple scenarios.

Independent scenarios can execute in any order, making parallel execution possible and failure investigation straightforward. Each scenario sets up its own preconditions, executes one behavior, and cleans up afterward. This isolation requires more setup code in step definitions but dramatically reduces investigation time when tests fail.
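One way to picture this isolation is a per-scenario fixture that creates its own data and always cleans up. The sketch below assumes a hypothetical shared `USER_STORE` dictionary in place of a real test environment; the scenario functions and the `fresh_user` helper are illustrative names only.

```python
import contextlib
import itertools
import random

USER_STORE = {}                  # hypothetical shared test environment
_ids = itertools.count(1)


@contextlib.contextmanager
def fresh_user():
    """Create a user for one scenario and remove it afterwards."""
    user_id = f"user-{next(_ids)}"
    USER_STORE[user_id] = {"orders": []}
    try:
        yield user_id
    finally:
        # Cleanup runs even if the scenario body raises.
        USER_STORE.pop(user_id, None)


def scenario_user_can_place_order():
    with fresh_user() as user_id:
        USER_STORE[user_id]["orders"].append("book")
        return len(USER_STORE[user_id]["orders"]) == 1


def scenario_new_user_has_no_orders():
    with fresh_user() as user_id:
        return USER_STORE[user_id]["orders"] == []


def run_in_random_order(scenarios, seed):
    """Run the scenarios in a shuffled order and report each result."""
    order = list(scenarios)
    random.Random(seed).shuffle(order)
    return {scenario.__name__: scenario() for scenario in order}
```

Because neither scenario reads data the other created, shuffling the order does not change the results, and the store is empty again after each run.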

What Makes Gherkin Test Cases Easy to Maintain?

Maintainable Gherkin test cases share characteristics that compound their value over time. These scenarios read naturally to stakeholders unfamiliar with the system, execute reliably in any order, and require minimal updates when requirements or implementations evolve.

The foundation of maintainability lies in behavior-driven development principles that prioritize communication over automation. Scenarios serve first as specifications that align teams around expected behaviors, and second as automated validation that those behaviors work correctly. When teams invert this priority, optimizing for automation convenience over readability, they sacrifice the collaborative benefits that make BDD testing valuable.

The Declarative Approach

Declarative scenarios describe what the system should do without prescribing how users accomplish it. Instead of documenting button clicks and form submissions, declarative scenarios capture business rules and user outcomes. This abstraction level keeps scenarios relevant even when implementation details change significantly.

A declarative scenario for shopping cart functionality might state that adding an item increases the cart count and updates the total. The scenario doesn't specify which button users click, what animation plays, or how the frontend communicates with the backend. Those details live in step definitions where they can change without affecting the scenarios that business stakeholders review and approve.

Single Responsibility for Scenarios

Each scenario should test exactly one behavior. This principle mirrors the single responsibility concept from software design. When a scenario covers multiple behaviors, failures become ambiguous. Did the test fail because feature A broke, feature B broke, or some interaction between them introduced a problem?

Single-behavior scenarios also improve documentation value. Stakeholders can scan scenario names to understand system capabilities without reading step details. A feature file with twenty focused scenarios provides clearer documentation than five sprawling scenarios that each cover multiple capabilities.

How Should You Structure Gherkin Syntax for Readability?

Gherkin syntax offers flexibility that teams sometimes exploit in ways that harm readability. Establishing consistent patterns across feature files helps everyone on the team write and review scenarios effectively. These conventions reduce cognitive load and enable effective Gherkin test authoring.

Third-person perspective keeps scenarios consistent and professional. Rather than mixing "I click the button" with "the user clicks the button," teams should standardize on third-person throughout. This consistency makes scenarios read like specifications rather than personal instructions.
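A convention like this is easy to check mechanically. The following is a rough heuristic sketch, not a Gherkin parser: it scans step lines for first-person pronouns and will also flag pronouns that appear inside quoted test data. The function name and the pronoun list are assumptions made for illustration.

```python
import re

STEP_KEYWORDS = ("Given", "When", "Then", "And", "But")
# Whole-word first-person pronouns; "I" is matched case-sensitively.
FIRST_PERSON = re.compile(r"\b(?:I|[Mm]y|[Mm]e|[Ww]e|[Oo]ur)\b")


def first_person_steps(feature_text):
    """Return (line_number, text) for steps phrased in the first person."""
    findings = []
    for number, raw in enumerate(feature_text.splitlines(), start=1):
        line = raw.strip()
        if line.startswith(STEP_KEYWORDS) and FIRST_PERSON.search(line):
            findings.append((number, line))
    return findings
```

A check of this kind could run in code review or CI so that mixed perspectives are caught before they spread across feature files.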

Step Phrasing Conventions

Steps should read as complete sentences with clear subjects and predicates. Abbreviating steps for convenience often backfires when new team members struggle to understand abbreviated phrasing. The extra words that make steps grammatically complete also make them self-documenting.

Given steps establish context and preconditions: they describe the world as it exists before the behavior under test occurs. When steps, in turn, capture the actions and events that trigger the behavior, and Then steps assert outcomes and changed states. Mixing these purposes within a single keyword creates confusion about what the scenario actually tests.
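The keyword roles above imply an ordering that can be verified automatically. This sketch assumes a scenario is available as a list of step lines; it resolves And and But to the preceding primary keyword and checks that the scenario never moves backwards from Then to When or from When to Given. The helper names are hypothetical.

```python
ORDER = {"Given": 0, "When": 1, "Then": 2}


def keyword_sequence(scenario_lines):
    """Resolve And/But to the primary keyword that precedes them."""
    resolved = []
    for raw in scenario_lines:
        parts = raw.strip().split(maxsplit=1)
        if not parts:
            continue
        word = parts[0]
        if word in ORDER:
            resolved.append(word)
        elif word in ("And", "But") and resolved:
            resolved.append(resolved[-1])
    return resolved


def follows_given_when_then(scenario_lines):
    """True when the steps never step back to an earlier phase."""
    phases = [ORDER[word] for word in keyword_sequence(scenario_lines)]
    return phases == sorted(phases)
```

A scenario that returns to When after a Then fails this check, which is usually a sign that it covers more than one behavior.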

Background Sections for Common Setup

When multiple scenarios in a feature share identical Given steps, Background sections eliminate repetition. The background runs before each scenario, establishing shared preconditions without duplicating lines across every scenario. However, backgrounds should remain brief. Lengthy backgrounds obscure what individual scenarios actually test.

The key constraint on backgrounds involves scenario independence. Background steps should establish context rather than create dependencies. If removing a scenario would break other scenarios because they relied on something that scenario's steps created, the feature file has coupling problems that no amount of background optimization will fix.

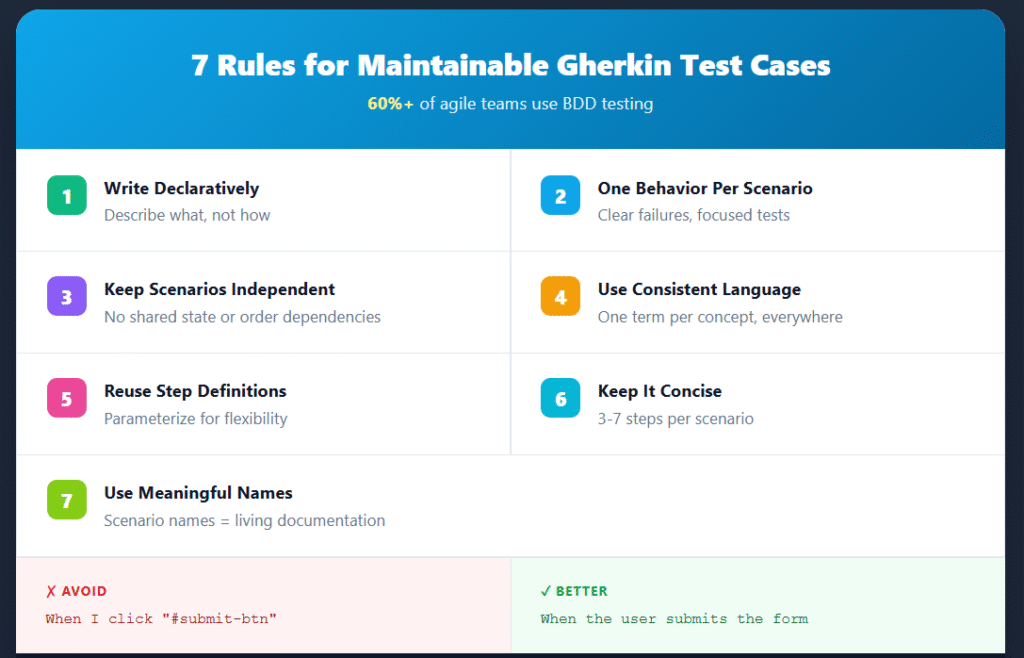

What Are the Essential Rules for Maintainable Gherkin Test Cases?

Teams that follow these principles consistently build test suites that remain valuable as products evolve. Each rule addresses a specific maintainability challenge that commonly undermines BDD investments.

- Write declaratively, not imperatively. Describe behaviors and outcomes rather than UI interactions. Step definitions handle the mechanics; scenarios capture the requirements.

- One behavior per scenario. The cardinal rule of BDD ensures clear failure signals and readable documentation. Split scenarios that cover multiple behaviors into focused individual scenarios.

- Maintain scenario independence. Every scenario must succeed regardless of execution order. Never assume another scenario has already run or created necessary data.

- Use consistent language. Standardize terminology across all feature files. When "customer," "user," and "account holder" mean the same thing, pick one term and use it everywhere.

- Reuse step definitions. Generic steps like "the user is logged in" serve multiple scenarios better than scenario-specific variants. Parameterize steps to maximize reuse without sacrificing clarity.

- Keep scenarios concise. Aim for three to seven steps per scenario. Longer scenarios typically cover multiple behaviors or include unnecessary detail that belongs in step definitions.

- Use meaningful names. Scenario names should describe the behavior tested. Someone reading only scenario names should understand what capabilities the feature provides.
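Two of these rules, one behavior per scenario and three to seven steps, lend themselves to a simple automated audit. The sketch below is a line-based approximation rather than a full Gherkin parser (it ignores Scenario Outlines, doc strings, and comments), and the function names and message formats are assumptions.

```python
STEP_KEYWORDS = ("Given", "When", "Then", "And", "But")


def split_scenarios(feature_text):
    """Return (name, steps) pairs; steps are the keyword-led lines of a scenario."""
    scenarios = []
    current = None
    for raw in feature_text.splitlines():
        line = raw.strip()
        if line.startswith("Scenario:"):
            current = (line[len("Scenario:"):].strip(), [])
            scenarios.append(current)
        elif current is not None and line.split(" ", 1)[0] in STEP_KEYWORDS:
            current[1].append(line)
    return scenarios


def lint(feature_text, min_steps=3, max_steps=7):
    """Flag scenarios that are too short, too long, or have several When steps."""
    problems = []
    for name, steps in split_scenarios(feature_text):
        if not min_steps <= len(steps) <= max_steps:
            problems.append(
                (name, f"{len(steps)} steps; expected {min_steps}-{max_steps}")
            )
        whens = sum(1 for step in steps if step.startswith("When "))
        if whens != 1:
            problems.append((name, f"{whens} When steps; expected 1"))
    return problems
```

The thresholds are parameters so that a team can tune them to its own conventions instead of treating three to seven as a hard limit.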

How Do Good and Bad Gherkin Practices Compare?

Understanding the contrast between maintainable and problematic patterns helps teams recognize issues in their own test suites. The following table illustrates common patterns and their implications for long-term maintenance:

| Practice | Poor Approach | Maintainable Approach | Why It Matters |
| --- | --- | --- | --- |
| Abstraction Level | Click button ID "submit-form-btn" | Submit the registration form | Survives UI redesigns |
| Scenario Scope | Test login, navigation, and profile in one scenario | Separate scenarios for each behavior | Clear failure diagnosis |
| Data Setup | Assume previous test created user | Each scenario creates its own test user | Enables parallel execution |
| Step Phrasing | "Enter 'test@email.com'" | "The user enters their email address" | Improves readability |
| Scenario Names | "Test 1" or "Login test" | "Registered user can access dashboard" | Documents system capabilities |
| Given/When/Then Usage | Multiple When-Then pairs | Single When-Then per scenario | Maintains focus on one behavior |

Teams can audit existing feature files against these patterns to identify improvement opportunities. Refactoring even a portion of problematic scenarios improves overall suite maintainability.

What Mistakes Kill Gherkin Test Case Maintainability?

Even experienced BDD practitioners fall into patterns that create maintenance burdens. Recognizing these anti-patterns helps teams course-correct before technical debt accumulates.

Overly Technical Language

Scenarios filled with CSS selectors, API endpoints, database table names, and other technical artifacts alienate business stakeholders and couple tests to implementation. The stakeholders who should validate that scenarios capture correct business rules cannot understand technical scenarios, defeating BDD's collaborative purpose.

Technical details belong in step definitions where developers maintain them alongside application code. Scenarios remain in business language that product owners and analysts can review meaningfully. This separation also improves maintainability because implementation changes only require step definition updates.

Scenario Bloat and Coverage Obsession

The temptation to test every possible input combination leads to massive feature files that become impossible to maintain. Scenario outlines with dozens of example rows often indicate testing at the wrong level. Edge cases and boundary conditions frequently belong in unit tests where they execute faster and provide more precise feedback.
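As an illustration of moving boundary conditions down a level, the sketch below covers several edge cases in a single fast unit test using the standard `unittest` module. The `is_valid_quantity` rule and its 1 to 99 range are invented for the example and do not come from any real product.

```python
import unittest


def is_valid_quantity(value):
    """Hypothetical business rule: a cart line accepts 1 to 99 units."""
    return isinstance(value, int) and 1 <= value <= 99


class QuantityBoundaryTest(unittest.TestCase):
    # Boundary values that would otherwise become Examples rows in a feature file.
    CASES = [(0, False), (1, True), (99, True), (100, False), (-1, False)]

    def test_boundaries(self):
        for value, expected in self.CASES:
            with self.subTest(value=value):
                self.assertEqual(is_valid_quantity(value), expected)


def run_boundary_tests():
    """Run the test case programmatically and report overall success."""
    suite = unittest.defaultTestLoader.loadTestsFromTestCase(QuantityBoundaryTest)
    result = unittest.TestResult()
    suite.run(result)
    return result.wasSuccessful()
```

The Gherkin suite can then keep a single scenario stating that invalid quantities are rejected, while the exhaustive boundaries stay in the unit test.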

Strategic test planning reserves Gherkin scenarios for behaviors that stakeholders care about. Technical edge cases that don't represent real user situations add maintenance burden without providing proportional business value. Focus on scenarios that would appear in product documentation and align with Cucumber best practices.

Hardcoded Test Data

Embedding specific email addresses, product names, or other data directly in scenarios creates brittleness. When the test environment changes or data gets cleaned up, scenarios fail for reasons unrelated to product functionality.

Parameterized steps with meaningful placeholders communicate intent without hardcoding specific values. The step definition retrieves or generates appropriate test data dynamically. This approach also enables running the same scenarios against different environments with different data configurations.
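A parameterized step backed by generated data might look like the following. This is a toy step registry written for illustration, not the API of Cucumber, behave, or any other framework; the `step` decorator, `run_step`, and the `example.test` email domain are all assumptions.

```python
import re
import uuid

STEPS = []  # registered (compiled pattern, function) pairs


def step(pattern):
    """Register a function as the definition for steps matching the pattern."""
    def register(func):
        STEPS.append((re.compile(pattern), func))
        return func
    return register


def unique_email(role):
    # Generated per run, so the scenario does not rely on pre-existing data.
    return f"{role}-{uuid.uuid4().hex[:8]}@example.test"


@step(r"^an? (?P<role>\w+) user exists$")
def create_user(ctx, role):
    ctx.setdefault("users", {})[role] = unique_email(role)


@step(r"^the (?P<role>\w+) user has (?P<count>\d+) items? in the cart$")
def fill_cart(ctx, role, count):
    ctx.setdefault("carts", {})[role] = int(count)


def run_step(ctx, text):
    """Find the first matching definition, call it, and return its name."""
    for pattern, func in STEPS:
        match = pattern.match(text)
        if match:
            func(ctx, **match.groupdict())
            return func.__name__
    raise LookupError(f"no step definition matches: {text!r}")
```

The scenario names a role such as "premium" instead of a literal address, and one definition serves every role, which is the same reuse that generic steps like "the user is logged in" provide.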

How Can You Scale BDD Testing Effectively?

As test suites grow, additional practices help teams manage complexity while preserving maintainability benefits. These techniques build on foundational practices to support larger-scale BDD implementations.

Tags for Selective Execution

Tags enable running subsets of scenarios based on characteristics like feature area, priority, or test type. A CI pipeline might run smoke tests on every commit but reserve comprehensive regression suites for nightly builds. Tags make this selective execution possible without maintaining separate feature files.

Tags also support filtering during development. When working on authentication features, developers can run only authentication-related scenarios rather than waiting for the entire suite. This faster feedback loop encourages running tests frequently.
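The selection logic behind tags can be sketched as simple set operations. Real runners offer richer tag expressions; this simplified version only supports "all of these tags" and "none of those tags", and the tag names and function names are illustrative. Feature-level tags are treated as inherited by every scenario in the feature, as in Cucumber.

```python
def parse_tagged_scenarios(feature_text):
    """Return [(scenario name, set of tags)] with feature-level tags inherited."""
    feature_tags, pending, scenarios = set(), set(), []
    for raw in feature_text.splitlines():
        line = raw.strip()
        if line.startswith("@"):
            pending |= set(line.split())
        elif line.startswith("Feature:"):
            # Tags seen before the Feature line apply to every scenario.
            feature_tags, pending = pending, set()
        elif line.startswith("Scenario:"):
            name = line[len("Scenario:"):].strip()
            scenarios.append((name, feature_tags | pending))
            pending = set()
    return scenarios


def select(scenarios, include, exclude=()):
    """Keep scenarios carrying every included tag and none of the excluded ones."""
    include, exclude = set(include), set(exclude)
    return [
        name
        for name, tags in scenarios
        if include <= tags and not (exclude & tags)
    ]
```

A commit pipeline might call `select(tagged, {"@smoke"})`, while a nightly job selects by feature area and excludes nothing.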

Data Tables for Complex Inputs

When scenarios involve structured data like user profiles or product catalogs, data tables express that information clearly. Rather than multiple Given steps establishing individual attributes, a data table presents all attributes together in a readable format.

Scenario outlines use a similar table syntax in their Examples section, enabling the same scenario structure to run against multiple data configurations. Cucumber runs the outline once for each Examples row, providing clear feedback about which data combinations pass or fail. A data table attached to a step, by contrast, is passed to that step as a single argument.
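To make the row-by-row behavior concrete, the sketch below expands a Scenario Outline by substituting `<placeholder>` values from each Examples row. It is a simplified model of what a runner does, assuming single-word placeholder names and well-formed pipe-delimited rows; `parse_table` and `expand_outline` are hypothetical helpers.

```python
import re


def parse_table(rows):
    """Turn '| a | b |' lines into a list of dicts keyed by the header row."""
    cells = [
        [cell.strip() for cell in row.strip().strip("|").split("|")]
        for row in rows
    ]
    header, body = cells[0], cells[1:]
    return [dict(zip(header, values)) for values in body]


def expand_outline(steps, example_rows):
    """Produce one concrete list of steps per Examples row."""
    expanded = []
    for example in parse_table(example_rows):
        expanded.append([
            re.sub(r"<(\w+)>", lambda match: example[match.group(1)], step)
            for step in steps
        ])
    return expanded
```

Each expanded list corresponds to one scenario execution, so a failure report can point to the exact row that broke.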

Integration with CI/CD Pipelines

Gherkin test cases deliver maximum value when integrated into continuous integration workflows. Automated execution on every code change catches regressions before they reach production. According to recent quality engineering research, 77% of organizations now invest in automation-driven quality processes.

Pipeline integration requires reliable scenarios that don't produce false failures. Flaky tests that sometimes pass and sometimes fail erode team confidence in the test suite and often get ignored rather than investigated. Maintainable scenarios tend to be more reliable because their focused scope reduces the surface area for environmental issues.
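One lightweight way to surface flaky scenarios is to compare outcomes across repeated runs of the same code revision. The sketch below assumes the run history is available as (scenario name, passed) pairs; the label strings and the `classify` function are illustrative and not tied to any CI product.

```python
from collections import defaultdict


def classify(run_history):
    """Label each scenario from repeated runs of the same code revision."""
    outcomes = defaultdict(set)
    for name, passed in run_history:
        outcomes[name].add(bool(passed))

    labels = {}
    for name, seen in outcomes.items():
        if seen == {True}:
            labels[name] = "stable-pass"
        elif seen == {False}:
            labels[name] = "consistent-fail"
        else:
            # Both outcomes observed without a code change.
            labels[name] = "flaky"
    return labels
```

Scenarios labeled flaky are candidates for investigation or quarantine, while consistent failures point to a likely regression.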

FAQ

How many steps should a Gherkin scenario contain?

Most maintainable scenarios contain three to seven steps. Scenarios shorter than three steps may lack sufficient context, while scenarios exceeding seven steps typically cover multiple behaviors and should be split. The goal is capturing one complete behavior with enough context for stakeholders to understand what's being tested.

Should non-technical team members write Gherkin scenarios?

Business analysts and product owners can contribute effectively to scenario writing, particularly the Given-When-Then structure that captures requirements. However, collaboration works better than delegation. The "three amigos" approach brings together product, development, and QA perspectives, while AI-powered tools like TestStory.ai can assist non-technical members by automatically converting plain English requirements into perfectly formatted Gherkin syntax.

When should I use Scenario Outline vs. multiple separate scenarios?

Scenario Outlines work well when testing the same behavior with different data inputs, such as validating form fields with various invalid entries. Separate scenarios are preferable when the behaviors differ meaningfully, even if they share some structure. The test of whether to use an outline is whether stakeholders would consider the examples to be variations of one behavior or distinct behaviors.

How do I handle scenarios that require complex setup?

Complex setup often indicates testing at the wrong level. Consider whether unit tests or integration tests might validate the same functionality with simpler setup. When BDD scenarios genuinely require complex preconditions, encapsulate that complexity in well-named step definitions rather than lengthy Given sections. Background steps can share common setup across scenarios within a feature.

Build a Sustainable BDD Practice

Writing maintainable Gherkin test cases requires upfront investment in establishing patterns, training team members, and sometimes refactoring existing scenarios. That investment pays dividends through reduced maintenance burden, better collaboration between technical and business stakeholders, and test suites that remain valuable as products evolve.

The principles covered here apply regardless of which Cucumber implementation your team uses or which test management approach you've adopted. Teams practicing these patterns find that their BDD efforts compound over time rather than becoming technical debt that slows development.

For teams looking to evolve their BDD practice, TestQuality delivers AI-Powered QA designed for modern DevOps workflows. Leverage powerful QA agents to create and run Gherkin test cases, and analyze results automatically through our TestStory.ai integration. Start your free trial to see how accelerating software quality with AI agents transforms BDD testing from scattered feature files into a coordinated, intelligent strategy.