The promise of AI-generated code is undeniably exciting. Developers are already leveraging tools to rapidly prototype, automate boilerplate, and even solve complex logical problems with unprecedented speed. But for every line of code an AI writes, a critical question emerges: How do we know it works? How do we ensure its quality, reliability, and adherence to requirements?

This isn't just about catching a stray bug; it's about integrating AI-generated code responsibly into our development pipelines. The speed of AI generation can quickly outpace our traditional verification methods, introducing new pain points for QA professionals and developers alike.

The New Frontier of Code Quality: AI's Unique Challenges

While AI offers immense efficiency gains, it also presents distinct challenges for quality assurance:

- The "Black Box" Dilemma: AI models are complex, and their decision-making process for generating code isn't always transparent. This can make it difficult to understand why a particular piece of code was generated, complicating debugging and root cause analysis.

- Subtle Flaws and Edge Cases: AI models excel at common patterns, but subtle logical errors, security vulnerabilities, or failures in obscure edge cases can be easily missed. These often require a nuanced, human understanding of context and domain-specific knowledge.

- Keeping Up with the Pace: If an AI can generate thousands of lines of code in minutes, how do we efficiently test and validate this output without becoming a bottleneck ourselves? Manual review simply won't scale.

- Maintaining Consistency and Standards: Ensuring AI-generated code adheres to established coding standards, architectural patterns, and performance benchmarks requires a robust, automated approach.

These challenges highlight the need for a sophisticated, human-in-the-loop verification strategy that empowers development teams to harness AI's power without compromising quality.

Bridging the Gap: Human-in-the-Loop with Smart Verification

The solution isn't to distrust AI, but to integrate intelligent verification processes that leverage human expertise where it matters most. This is where a robust test management strategy, particularly one focused on shift-left principles and clear communication, becomes indispensable.

Imagine a scenario: an AI assistant generates a new feature's foundational code. Instead of simply accepting it, a streamlined process kicks in:

- Automated Sanity Checks: Immediate static analysis, linting, and basic unit tests run automatically as part of the initial CI/CD pipeline.

- Contextualized Review: The generated code, along with the original prompt or requirement, is presented for review. This is where a tool that integrates seamlessly with your development workflow shines.

- Targeted Test Case Generation: Based on the AI-generated code, human testers or even other AI tools can then focus on generating more complex, scenario-based tests, particularly those addressing edge cases or business-critical logic. This is an area where behavior-driven development (BDD) with Gherkin can be incredibly powerful, allowing teams to articulate expected behaviors in a human-readable format that even AI can learn from.

The goal is to create a feedback loop where AI assists in code generation, and intelligent testing confirms its validity, continuously improving both the code and the AI model itself.
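As a rough illustration of the automated sanity-check step, here is a minimal sketch that uses only Python's standard `ast` module. The `sanity_check` function, the `RISKY_CALLS` policy, and the sample snippet are all hypothetical; a real pipeline would more likely rely on an established linter and static analyzer.

```python
import ast

# Hypothetical policy: call names we refuse to accept without human review.
RISKY_CALLS = {"eval", "exec"}


def sanity_check(source: str) -> list[str]:
    """Return a list of human-readable findings for a generated snippet."""
    try:
        tree = ast.parse(source)
    except SyntaxError as err:
        return [f"syntax error on line {err.lineno}: {err.msg}"]

    findings = []
    for node in ast.walk(tree):
        # Flag direct calls such as eval(...) or exec(...).
        if isinstance(node, ast.Call) and isinstance(node.func, ast.Name):
            if node.func.id in RISKY_CALLS:
                findings.append(f"line {node.lineno}: call to {node.func.id}()")
        # Flag functions that ship without a docstring.
        if isinstance(node, ast.FunctionDef) and ast.get_docstring(node) is None:
            findings.append(f"line {node.lineno}: function {node.name}() has no docstring")
    return findings


# Stand-in for code returned by an AI assistant (illustrative only).
generated = '''
def total(prices):
    return eval("+".join(map(str, prices)))
'''

for finding in sanity_check(generated):
    print(finding)
```

A check like this is cheap enough to run on every commit, which leaves human reviewers free to concentrate on logic and context rather than mechanical issues.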

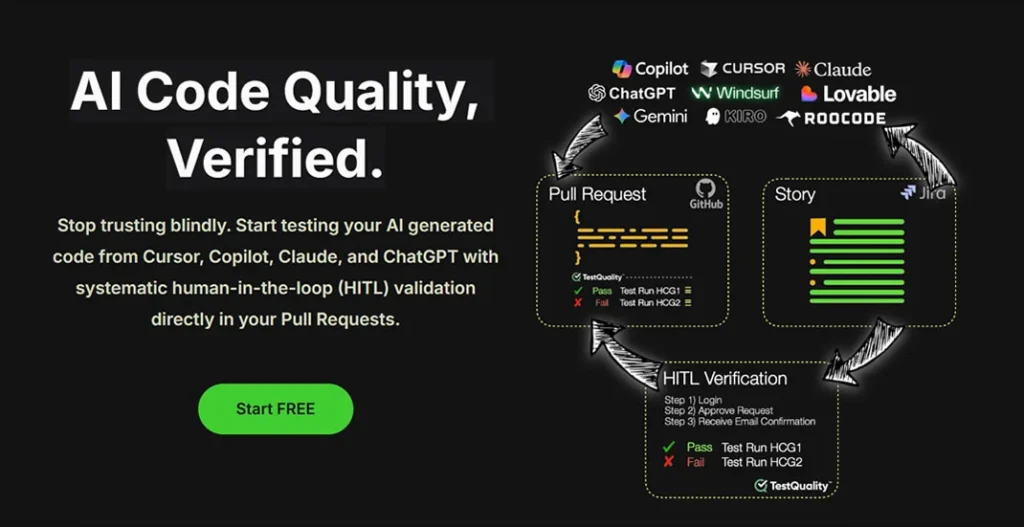

TestQuality: Your Partner in AI Code Verification

At TestQuality, we understand these evolving needs. Our platform is designed to provide the clarity and control necessary to integrate AI-generated code confidently into your projects.

Here's how TestQuality helps you navigate the AI code revolution:

- Seamless Pull Request Verification: Integrate test plans directly into your GitHub, GitLab, or Bitbucket pull request workflows. When AI-generated code arrives, TestQuality provides a clear overview of associated tests, their status, and any failures, enabling rapid human review and iteration.

- Behavior-Driven Development (BDD) with Gherkin: Define expected behaviors in plain language using Gherkin syntax. This not only clarifies requirements for AI models (when used for test generation) but also provides an unambiguous benchmark for verifying AI-generated code. TestQuality's robust Gherkin support helps you manage these living specifications.

- The Human-in-the-Loop with Gherkin: For AI-generated code, Gherkin scenarios act as a vital bridge. They clearly articulate what the code should do, allowing human testers to quickly identify discrepancies or missing functionality in the AI's output. TestQuality facilitates the creation, execution, and reporting of these Gherkin-based tests, ensuring every piece of AI-generated code meets defined expectations.

- Comprehensive Test Management: Beyond individual code snippets, TestQuality offers a holistic view of your testing efforts. Manage test cases, link them to requirements, track execution, and gain insights into your overall quality posture, even as AI accelerates your development cycle. This ensures that even rapidly generated code is part of a thoroughly tested and validated product.
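To make the Gherkin idea concrete, the sketch below runs a plain-language scenario against a function standing in for AI-generated code. Everything here is an assumption made for illustration: `apply_discount`, the scenario text, and the tiny `step`/`run_scenario` runner are hypothetical and are not part of TestQuality or any BDD library. In practice a team would typically use an established framework such as behave or pytest-bdd.

```python
import re


# Hypothetical stand-in for an AI-generated function under review.
def apply_discount(total: float, percent: float) -> float:
    return round(total * (1 - percent / 100), 2)


SCENARIO = """
Scenario: Loyalty discount on a large order
  Given a cart total of 200.00
  When a 15 percent discount is applied
  Then the amount due is 170.00
"""

STEPS = []  # (compiled pattern, handler) pairs


def step(pattern):
    """Register a handler for step text matching the given regex."""
    def register(func):
        STEPS.append((re.compile(pattern), func))
        return func
    return register


@step(r"a cart total of ([\d.]+)")
def given_total(ctx, amount):
    ctx["total"] = float(amount)


@step(r"a ([\d.]+) percent discount is applied")
def when_discount(ctx, percent):
    ctx["due"] = apply_discount(ctx["total"], float(percent))


@step(r"the amount due is ([\d.]+)")
def then_due(ctx, expected):
    assert ctx["due"] == float(expected), f"expected {expected}, got {ctx['due']}"


def run_scenario(text: str) -> dict:
    """Run each Given/When/Then line against the registered handlers."""
    ctx = {}
    for line in text.strip().splitlines():
        keyword, _, body = line.strip().partition(" ")
        if keyword not in {"Given", "When", "Then", "And", "But"}:
            continue  # skip the Scenario title and anything else
        for pattern, handler in STEPS:
            match = pattern.fullmatch(body)
            if match:
                handler(ctx, *match.groups())
                break
        else:
            raise LookupError(f"no step defined for: {body}")
    return ctx


run_scenario(SCENARIO)
print("scenario passed")
```

Because the scenario is written before (or independently of) the generated code, a failing `Then` step points directly at a gap between the stated behavior and what the AI produced.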

The era of AI-generated code is here, and it promises to transform software development. By adopting intelligent verification strategies and leveraging tools like TestQuality, QA professionals and developers can confidently embrace this future, ensuring that speed never comes at the expense of quality.

TestQuality's Exclusive GitHub PR Testing

TestQuality isn't just another test management tool; it's designed to transform your PR review process into a robust AI code validation hub. Our exclusive GitHub PR testing feature is specifically built to ensure that every PR—especially those containing AI-generated code—is thoroughly tested and verified before it merges into your main branch.

- Streamlined Workflow: TestQuality's deep integration with GitHub streamlines your development workflow. It ensures that all changes made within a PR are rigorously tested before being merged, maintaining the integrity and quality of your main branch.

- Unified Test Management in the PR: Beyond automated checks, TestQuality provides a unified platform directly within your PRs, enabling true human-in-the-loop validation:

  - Execute Manual Test Cases: You can directly create and execute manual test cases against the code in a specific PR branch. For AI-generated code, this is absolutely critical for verifying complex logic, handling subtle edge cases, and conducting in-depth security reviews that automated tools might miss.

  - Conduct Exploratory Testing: Allow your team to perform ad-hoc, unscripted testing specifically targeting the AI-generated parts of the code, uncovering unexpected behaviors or vulnerabilities.

  - Track Automated Test Results: See all results from your CI/CD pipeline (unit, integration, end-to-end tests) presented alongside manual reviews in one cohesive view within the PR.

  - Contextual Feedback & Annotation: Developers can easily leave specific feedback, questions, or annotations on particular AI-generated code blocks directly within the PR, facilitating clear communication and efficient resolution tracking.

  - Clear Pass/Fail Metrics: Ensure no AI-generated code merges until it meets your verified quality standards.

  - Audit Trails: Maintain a comprehensive history of all validation efforts, providing transparency for compliance and future reference.
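The pass/fail and audit-trail ideas can be sketched as a simple merge gate. This is a conceptual model only, under the assumption that a PR needs at least one passing automated check and one passing manual check; `CheckResult` and `PullRequestGate` are hypothetical names and do not represent TestQuality's API or data model.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class CheckResult:
    name: str            # e.g. "unit tests" or "manual: refund edge cases"
    kind: str            # "automated" or "manual"
    passed: bool
    reviewer: str = "ci"


@dataclass
class PullRequestGate:
    # Assumed policy: both kinds of verification are required before merging.
    required_kinds: frozenset = frozenset({"automated", "manual"})
    results: list = field(default_factory=list)
    audit_log: list = field(default_factory=list)

    def record(self, result: CheckResult) -> None:
        """Store a result and append a timestamped audit entry."""
        self.results.append(result)
        stamp = datetime.now(timezone.utc).isoformat()
        status = "pass" if result.passed else "fail"
        self.audit_log.append(f"{stamp} {result.reviewer} {result.name}: {status}")

    def can_merge(self) -> bool:
        """Mergeable only if nothing failed and every required kind is present."""
        if any(not r.passed for r in self.results):
            return False
        kinds_passed = {r.kind for r in self.results}
        return self.required_kinds <= kinds_passed


gate = PullRequestGate()
gate.record(CheckResult("unit tests", "automated", True))
print(gate.can_merge())  # False: automated checks alone are not enough

gate.record(CheckResult("manual: refund edge cases", "manual", True, reviewer="qa-lead"))
print(gate.can_merge())  # True: both kinds have passed
```

Requiring a manual result in addition to the automated ones is the design choice that keeps a human in the loop: green CI alone never unlocks the merge.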

TestQuality's integration with GitHub pull requests ensures that every PR is thoroughly tested before it is merged into the main branch.

By integrating this level of granular, human-led testing directly into your pull request workflow, TestQuality empowers your team to confidently leverage the speed of AI while eliminating the risks of blind trust.

Ready to bring smarter verification to your AI-powered development? Explore TestQuality's features and see how we can help you build with confidence.