AI transforms CI/CD testing from reactive bug detection into proactive quality assurance that accelerates release cycles while improving software reliability.

- AI-powered test generation and self-healing scripts reduce maintenance overhead, allowing QA teams to focus on exploratory testing and edge cases.

- Native Git integrations create seamless feedback loops between code commits and test execution, catching defects before they reach production.

- DevOps AI testing enables intelligent test prioritization, running the most relevant tests first based on code change analysis.

- Organizations implementing mature automated QA pipelines report deployment frequency increases of 200% with fewer production incidents.

Start embedding AI into your testing workflows now, because teams that wait will struggle to match the velocity of competitors who already have.

Continuous integration and continuous deployment pipelines have become the backbone of modern software delivery. According to Gartner's Market Guide for AI-Augmented Software Testing Tools, 80% of enterprises will have integrated AI testing tools into their software engineering toolchains by 2027. This projected adoption reflects a broader shift in how quality assurance teams approach testing within DevOps workflows.

The traditional approach of scripting tests manually and running them sequentially can't keep pace with daily deployments and microservices architectures. AI in CI/CD testing introduces capabilities that adapt to code changes, predict potential failures, and optimize test execution in ways that static automation never could. Development teams using GitHub and Jira workflows particularly benefit from these advances, as intelligent testing systems can automatically analyze pull requests, understand requirement changes, and generate relevant test cases.

This guide covers practical strategies for implementing AI across your automated QA pipelines while maximizing the value of your existing Git integrations.

What Makes AI in CI/CD Testing Different from Traditional Automation?

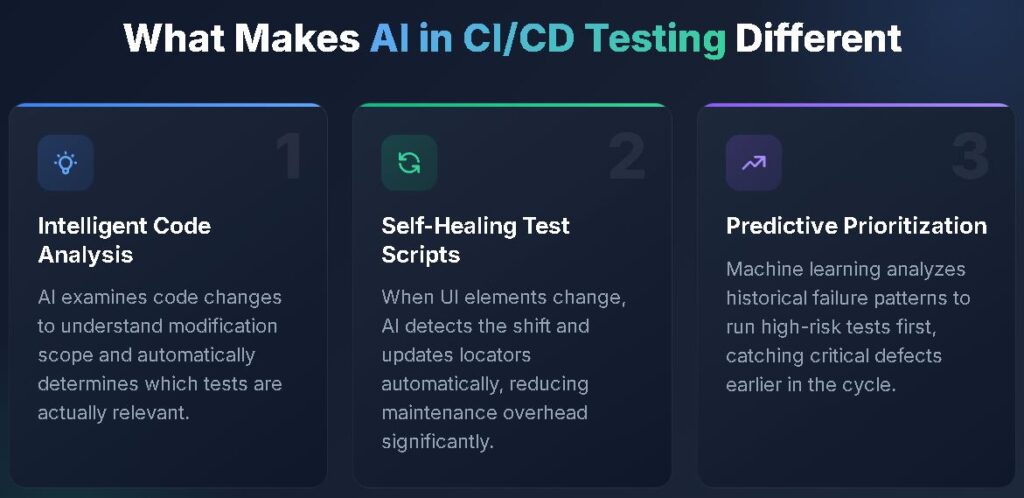

Traditional test automation relies on predefined scripts that execute the same steps regardless of what code changed. When a developer modifies a login form, the entire regression suite runs, wasting compute resources on unrelated tests. AI in CI/CD testing changes this equation by understanding code relationships and making intelligent decisions about test execution.

Machine learning models analyze code commits to determine which tests are actually relevant to specific changes. If a pull request modifies payment processing logic, AI prioritizes financial transaction tests while deprioritizing unrelated UI tests. This targeted approach can reduce test execution times while still exercising the code that changed.
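To illustrate the baseline that ML-based selectors build on, the sketch below selects tests by plain set intersection, not a trained model. It assumes you already have a per-test coverage map listing the source files each test executed on its last run, for example exported from a coverage tool. All file and test names are hypothetical.

```python
"""Minimal change-based test selection sketch (hypothetical data)."""


def select_tests(changed_files: set[str], coverage_map: dict[str, set[str]]) -> list[str]:
    """Return tests whose recorded coverage touches any changed file.

    coverage_map maps a test name to the set of source files it executed
    on its last run (assumed to come from a coverage tool export).
    """
    selected = [
        test
        for test, covered in coverage_map.items()
        if covered & changed_files  # non-empty intersection => test is affected
    ]
    return sorted(selected)


if __name__ == "__main__":
    # Hypothetical coverage data for three tests.
    coverage = {
        "test_refund": {"payments/refund.py", "payments/charge.py"},
        "test_login": {"auth/login.py"},
        "test_checkout_ui": {"ui/checkout.tsx"},
    }
    # A pull request that only touches payment processing logic.
    print(select_tests({"payments/charge.py"}, coverage))  # ['test_refund']
```

A new test has no coverage history, so this sketch would never select it. A real pipeline would typically always run new or recently edited tests alongside the selected set.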

How Does AI Handle Test Maintenance?

Self-healing capabilities are one of the most practical advances in DevOps AI testing. Traditional scripts break when element locators change, forcing QA engineers to constantly update selectors and fix flaky tests. AI-powered testing tools recognize UI changes and automatically adjust locators, reducing maintenance overhead.

Consider what happens when a developer renames a button class from "submit-btn" to "action-submit." Traditional Selenium scripts fail immediately, creating noise in your pipeline and blocking deployments. AI systems recognize the visual and contextual similarity, automatically update the locator, and flag the change for human review. This self-healing behavior keeps automated QA pipelines flowing while capturing meaningful failures.
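The fallback step behind self-healing can be approximated without any ML. When the stored locator stops matching, score candidate elements against the attributes recorded the last time the test passed, and pick the closest one.

This sketch rests on several assumptions:

- Elements arrive as plain attribute dictionaries. A real tool would read them from the DOM through its browser driver.
- The attribute choice, equal weighting, and 0.6 threshold are illustrative.
- It does not describe any specific vendor's algorithm.

```python
from difflib import SequenceMatcher
from typing import Optional


def similarity(a: str, b: str) -> float:
    """Fuzzy string similarity in [0, 1]."""
    return SequenceMatcher(None, a, b).ratio()


def heal_locator(
    recorded: dict[str, str],
    candidates: list[dict[str, str]],
    threshold: float = 0.6,
) -> Optional[tuple[dict[str, str], float, bool]]:
    """Pick the candidate element most similar to the recorded one.

    Returns (element, score, needs_review), or None if nothing clears the
    threshold. needs_review is True whenever the match is not exact, so a
    human can confirm the healed locator.
    """
    best, best_score = None, 0.0
    for element in candidates:
        # Exact comparison on relatively stable attributes (illustrative choice).
        scores = [
            1.0 if element.get(attr) == recorded.get(attr) else 0.0
            for attr in ("tag", "text", "name")
        ]
        # Fuzzy comparison on the class name, which is what usually changes.
        scores.append(similarity(element.get("class", ""), recorded.get("class", "")))
        score = sum(scores) / len(scores)
        if score > best_score:
            best, best_score = element, score
    if best is None or best_score < threshold:
        return None
    return best, best_score, best_score < 1.0
```

With the recorded button `{"tag": "button", "text": "Submit", "name": "submit", "class": "submit-btn"}`, a renamed `action-submit` button with the same tag, text, and name outscores a `cancel-btn` button. It comes back flagged for review rather than silently accepted.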

What About Test Generation?

Beyond maintaining existing tests, AI now generates new test cases from requirements, user stories, and production usage patterns. Natural language processing enables teams to describe expected behavior in plain English, which AI translates into executable test scripts. This capability accelerates coverage expansion while ensuring tests align with actual business requirements rather than developer assumptions about how features should work.

How Can Teams Implement AI-Driven Test Generation Effectively?

Implementing AI test generation requires thoughtful integration with existing development workflows rather than wholesale replacement of current practices. The most successful teams treat AI as an accelerator for human expertise, not a replacement.

Start by identifying repetitive test creation tasks that consume significant QA time. User registration flows, form validations, and CRUD operations often follow predictable patterns that AI handles exceptionally well. Feed your requirement documents and user stories into AI generation tools, then have experienced testers review and refine the output. This human-in-the-loop approach combines AI efficiency with human judgment about edge cases and business logic nuances.

Connecting AI Generation to Git Workflows

Integration with version control creates powerful automation opportunities. When developers create pull requests, AI systems can analyze the changed code and automatically generate relevant test cases. These generated tests become part of the PR review process, ensuring coverage exists before code merges into main branches.

Teams using GitHub test management workflows can trigger AI generation directly from issue creation. When a product manager writes a user story describing new functionality, AI generates preliminary test cases that QA refines during sprint planning. This shift-left approach surfaces testing considerations earlier in development, reducing late-cycle surprises.

Managing AI-Generated Test Quality

Not every AI-generated test deserves inclusion in your suite. Establish clear quality criteria and review processes for generated content. Effective criteria typically include:

- Tests must map to documented requirements or acceptance criteria

- Generated assertions should validate meaningful business outcomes

- Test data should reflect realistic production scenarios

- Execution time must remain within acceptable pipeline limits

Treat AI generation as draft content requiring human curation rather than production-ready automation.
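Part of the review criteria above can be automated as a pre-filter before human curation. The sketch below has these hypothetical parts:

- A metadata record that each generated test is assumed to arrive with (linked requirement ID, assertion strings, estimated duration).
- The trivial-assertion list and the 30-second budget, which are placeholders.

Judging whether test data reflects realistic production scenarios is left to the human reviewer.

```python
from dataclasses import dataclass, field
from typing import Optional

# Placeholder examples of assertions that validate nothing meaningful.
TRIVIAL_ASSERTIONS = {"assert True", "assert 1 == 1"}


@dataclass
class GeneratedTest:
    name: str
    requirement_id: Optional[str]
    assertions: list[str] = field(default_factory=list)
    est_duration_s: float = 0.0


def review_issues(
    test: GeneratedTest,
    known_requirements: set[str],
    max_duration_s: float = 30.0,
) -> list[str]:
    """Return reasons to hold a generated test back (empty list = send to human review)."""
    issues = []
    if test.requirement_id not in known_requirements:
        issues.append("not linked to a documented requirement")
    meaningful = [a for a in test.assertions if a.strip() not in TRIVIAL_ASSERTIONS]
    if not meaningful:
        issues.append("no meaningful assertions")
    if test.est_duration_s > max_duration_s:
        issues.append("exceeds pipeline time budget")
    return issues
```

An empty result only means the test is worth a reviewer's time. It is not approval for merging.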

What Are the Essential Git Integrations for Automated QA Pipelines?

Git integrations form the connective tissue between code changes and test execution. Without tight coupling between version control and testing infrastructure, teams miss opportunities for intelligent automation and fast feedback loops.

Pull request testing is a critical integration point. When developers open or update a pull request, automated QA pipelines should trigger immediately, running relevant tests against the proposed changes. Results appear directly in the PR interface, giving developers immediate visibility into quality impacts without switching contexts between tools.

Bidirectional Synchronization Between Testing and Development Tools

One-way data flow from Git to testing tools provides limited value. True integration requires bidirectional synchronization where test results, defects, and coverage metrics flow back into development workflows. When a test fails, the associated defect should automatically link to the relevant commit and notify the responsible developer.

This synchronization extends to Jira and other issue trackers. Test failures create linked issues, test coverage maps to user stories, and release readiness dashboards aggregate quality metrics across both systems. Development and QA teams share a single source of truth rather than maintaining separate views of project status.

Branch-Based Test Strategies

Sophisticated Git integrations support different testing strategies for different branch types. Feature branches might run focused unit and integration tests, while releases to staging trigger comprehensive end-to-end suites. AI enhances these strategies by analyzing branch purpose and code content to recommend an appropriate test scope.

| Branch Type | Recommended Test Scope | AI Enhancement |
| --- | --- | --- |
| Feature branches | Unit tests, affected integration tests | AI identifies impacted components and prioritizes relevant tests |
| Development/main | Full regression, smoke tests | AI predicts high-risk changes needing deeper coverage |
| Release candidates | End-to-end, performance, security | AI validates deployment readiness based on historical patterns |
| Hotfix branches | Targeted regression, sanity checks | AI fast-tracks critical path testing |

This branch-aware approach prevents pipeline bottlenecks while ensuring appropriate rigor at each stage.
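A branch-aware pipeline often starts as a simple rule table before any AI refinement is layered on. The sketch below maps branch names to the scopes in the table above. It assumes a `feature/`, `release/`, `hotfix/` naming convention with `main` and `develop` as mainline branches. The prefixes and suite names are hypothetical, so adapt them to your own branching model.

```python
# Hypothetical branch-prefix conventions mapped to the scopes in the table.
PREFIX_SCOPES: dict[str, list[str]] = {
    "feature/": ["unit", "affected-integration"],
    "release/": ["e2e", "performance", "security"],
    "hotfix/": ["targeted-regression", "sanity"],
}
MAINLINE_BRANCHES = {"main", "develop"}
MAINLINE_SCOPE = ["full-regression", "smoke"]


def scope_for_branch(branch: str) -> list[str]:
    """Return the test suites to run for a given branch name."""
    if branch in MAINLINE_BRANCHES:
        return list(MAINLINE_SCOPE)
    for prefix, suites in PREFIX_SCOPES.items():
        if branch.startswith(prefix):
            return list(suites)  # copy so callers can't mutate the config
    # Unknown branch types conservatively fall back to the mainline scope.
    return list(MAINLINE_SCOPE)
```

The conservative fallback trades some pipeline time for safety. An AI layer would then narrow or widen these defaults per change rather than replace them.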

How Do You Build an Effective AI in CI/CD Testing Strategy?

Building effective AI testing strategies requires balancing automation ambition with practical implementation constraints. Teams that attempt to automate everything simultaneously often achieve nothing meaningful. Successful adoption follows incremental patterns that demonstrate value at each stage.

Start With Test Prioritization and Selection

Test prioritization offers the fastest path to measurable value from AI integration. Machine learning models analyze historical test results, code coverage data, and failure patterns to rank tests by relevance and risk. High-priority tests run first, providing faster feedback on likely failure points.
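To make the ranking concrete, the sketch below uses a hand-weighted heuristic as a stand-in for a trained model. Each test's score blends its historical failure rate with whether it covers files in the current change. The 0.6/0.4 weights and the `CaseStats` fields are assumptions for illustration. A learned model would estimate these relationships from your own pipeline history instead of fixing them by hand.

```python
from dataclasses import dataclass


@dataclass
class CaseStats:
    name: str
    runs: int
    failures: int
    covered_files: set[str]


def priority(stats: CaseStats, changed_files: set[str],
             w_fail: float = 0.6, w_change: float = 0.4) -> float:
    """Heuristic relevance score: historical failure rate plus change overlap."""
    failure_rate = stats.failures / stats.runs if stats.runs else 0.0
    touches_change = 1.0 if stats.covered_files & changed_files else 0.0
    return w_fail * failure_rate + w_change * touches_change


def prioritize(all_stats: list[CaseStats], changed_files: set[str]) -> list[str]:
    """Return test names ordered from highest to lowest priority."""
    ranked = sorted(all_stats, key=lambda s: priority(s, changed_files), reverse=True)
    return [s.name for s in ranked]
```

Tests with no run history score only on change overlap here. Many teams deliberately boost brand-new tests instead, since they have no track record yet.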

Prioritization also enables intelligent test selection for time-constrained pipelines. Rather than running everything or nothing, AI selects the subset most likely to catch failures within the available time window. This approach maintains quality gates without blocking rapid iteration during active development.
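Choosing tests within a time window is a budgeted selection problem, a variant of the knapsack problem. The sketch below uses a common greedy approximation: take tests in order of priority per second of runtime until the budget is spent. Priorities could come from a ranking like the one described above. The test names and numbers are invented for illustration.

```python
def select_within_budget(
    tests: list[tuple[str, float, float]], budget_s: float
) -> list[str]:
    """Greedy budgeted selection.

    tests: (name, priority, duration_s) tuples, where priority is any
    non-negative relevance score. Returns selected names in pick order.
    """
    # Highest priority per second first; guard against zero durations.
    ranked = sorted(tests, key=lambda t: t[1] / max(t[2], 1e-9), reverse=True)
    chosen, used = [], 0.0
    for name, _priority, duration in ranked:
        if used + duration <= budget_s:
            chosen.append(name)
            used += duration
    return chosen


if __name__ == "__main__":
    candidates = [
        ("checkout_e2e", 0.9, 300.0),
        ("charge_unit", 0.8, 5.0),
        ("refund_api", 0.5, 20.0),
        ("ui_snapshot", 0.1, 60.0),
    ]
    print(select_within_budget(candidates, 120.0))
    # ['charge_unit', 'refund_api', 'ui_snapshot']
```

In this example the 300-second end-to-end test cannot fit a 120-second window, so it would belong in a later, unbudgeted stage. Greedy selection is fast but not always optimal. An exact solver can do better when budgets are tight and the suite is small.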

Implement Continuous Feedback Loops

DevOps AI testing thrives on data. Every test execution generates information that improves future predictions and optimizations. Establish mechanisms to capture test duration, pass/fail patterns, flakiness indicators, and correlation with code changes. AI models continuously learn from this data, becoming more accurate over time.
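Capturing this data does not require special tooling to start. The sketch below assumes each CI job appends one record per test execution; the `RunRecord` fields are hypothetical. It aggregates those records into per-test failure rate and mean duration, the kind of features prioritization and scheduling models consume.

```python
from collections import defaultdict
from dataclasses import dataclass
from statistics import mean


@dataclass(frozen=True)
class RunRecord:
    test: str
    commit: str
    passed: bool
    duration_s: float


def summarize(records: list[RunRecord]) -> dict[str, dict[str, float]]:
    """Aggregate raw run records into per-test features."""
    by_test: dict[str, list[RunRecord]] = defaultdict(list)
    for record in records:
        by_test[record.test].append(record)
    return {
        test: {
            "runs": len(runs),
            "failure_rate": sum(not r.passed for r in runs) / len(runs),
            "mean_duration_s": mean(r.duration_s for r in runs),
        }
        for test, runs in by_test.items()
    }
```

Keeping the commit on each record matters later. It lets you correlate outcomes with code changes and spot tests that disagree with themselves on the same commit.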

Feedback loops should extend to human testers as well. When AI recommendations prove incorrect, capture that feedback to refine model behavior. When generated tests miss important scenarios, document the gap to improve future generations. This continuous improvement cycle compounds benefits over months and years of operation.

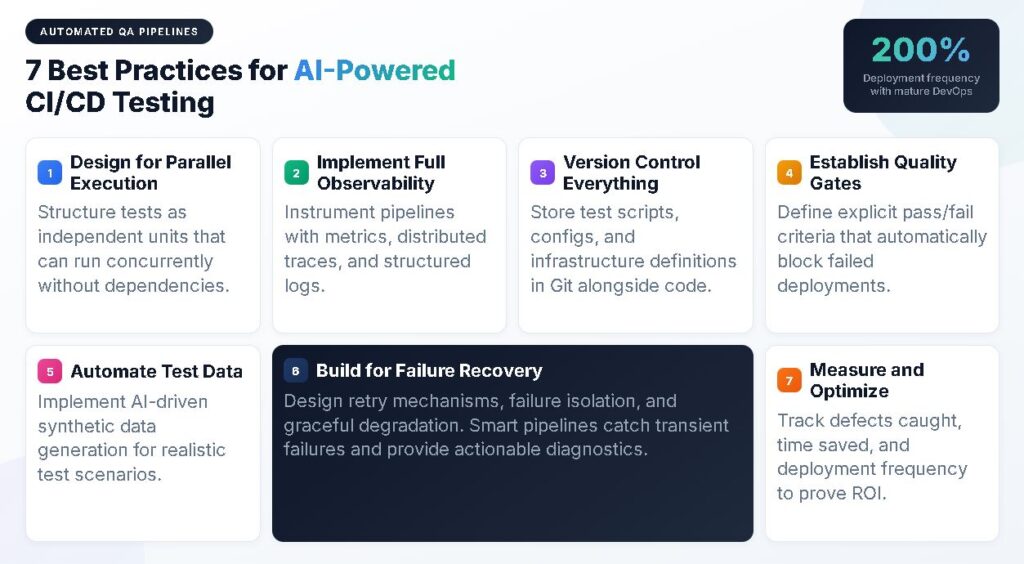

Top 7 Best Practices for Automated QA Pipelines

Implementing AI requires attention to foundational practices that maximize the value of automation. These practices apply whether you are starting fresh or enhancing existing pipelines.

1. Design for Parallel Execution from Day One: Structure tests as independent units that can run concurrently without shared state conflicts. AI optimization becomes far more powerful when tests can be distributed across multiple runners based on intelligent scheduling rather than forced into sequential queues.

2. Implement Comprehensive Observability: Build your pipeline with detailed metrics, traces, and logs. AI systems require rich data to make intelligent decisions about test selection, failure analysis, and optimization opportunities. Blind spots in observability become blind spots in AI effectiveness.

3. Version Control Everything Including Test Infrastructure: Store pipeline configurations, test scripts, and environment definitions in Git alongside application code. This practice enables AI to correlate infrastructure changes with test behavior and supports reliable rollback when changes cause problems.

4. Establish Clear Quality Gates with Automated Enforcement: Define explicit criteria for what constitutes a passing pipeline and automatically enforce them. AI can help set dynamic thresholds based on historical baselines, but human judgment should establish the quality standards those thresholds protect.

5. Invest in Test Data Management: AI-generated tests require realistic test data to provide meaningful validation. Implement automated test data generation, synthetic data creation, and production data anonymization to fuel your testing efforts without compromising privacy.

6. Build for Failure Recovery: Automated QA pipelines will fail, whether from flaky tests, infrastructure issues, or genuine defects. Design retry mechanisms, failure isolation, and graceful degradation to keep pipelines valuable even when individual components misbehave.

7. Measure and Report on Testing ROI: Track metrics that demonstrate testing value: defects caught before production, time saved through automation, deployment frequency improvements, and customer-facing incident rates. These metrics justify continued investment and guide optimization efforts.
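For the first practice, a well-known way to spread independent tests across runners is the longest-processing-time-first (LPT) heuristic. Sort tests by expected duration, then always hand the next one to the least-loaded runner. The sketch below assumes durations come from historical timings. LPT is a classic scheduling heuristic rather than an AI model, but it is a reasonable baseline for a learned scheduler to improve on.

```python
import heapq


def assign_to_runners(durations: dict[str, float], runners: int) -> list[list[str]]:
    """LPT scheduling: longest tests first, each to the currently least-loaded runner."""
    # Heap of (current_load, runner_index); ties break on the lower index.
    heap = [(0.0, i) for i in range(runners)]
    heapq.heapify(heap)
    buckets: list[list[str]] = [[] for _ in range(runners)]
    for name, duration in sorted(durations.items(), key=lambda kv: kv[1], reverse=True):
        load, idx = heapq.heappop(heap)
        buckets[idx].append(name)
        heapq.heappush(heap, (load + duration, idx))
    return buckets


if __name__ == "__main__":
    timings = {"e2e_checkout": 120.0, "e2e_search": 90.0, "api_suite": 60.0,
               "unit_suite": 30.0, "lint": 10.0}
    print(assign_to_runners(timings, 2))
    # [['e2e_checkout', 'unit_suite', 'lint'], ['e2e_search', 'api_suite']]
```

The two runners in the example finish at 160 and 150 seconds. This only works if tests share no state, which is exactly what practice 1 asks for.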

How Do You Optimize DevOps AI Testing for Continuous Feedback?

Continuous feedback is the ultimate goal of automated QA pipelines. Every code change should generate rapid, relevant quality signals that inform development decisions. AI optimization focuses on reducing feedback latency while maintaining signal quality.

The Continuous Delivery Foundation's 2024 State of CI/CD Report found that 83% of developers are now involved in DevOps-related activities, with CI/CD tool usage strongly correlated with better deployment performance across all DORA metrics. Teams using well-integrated toolchains consistently outperform those juggling multiple disconnected solutions.

Intelligent Test Execution Ordering

Beyond selecting which tests to run, AI determines optimal execution order. Tests most likely to fail based on code change analysis run first, providing the fastest possible detection of genuine issues. Tests with longer execution times run in parallel background jobs while faster tests complete.
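Under one simplifying assumption, the ordering problem has a neat closed form. Suppose each test fails independently with estimated probability p and takes time t. Then running tests in ascending order of t/p minimizes the expected time until the first failure is seen. An adjacent-swap argument shows why: placing test i before test j is better exactly when t_i·p_j < t_j·p_i.

The probabilities in the sketch are hypothetical model outputs. Real failures are often correlated, so treat this ordering as a heuristic.

```python
def _sort_key(test: tuple[str, float, float]) -> float:
    """Duration per unit of failure probability; never-failing tests go last."""
    _name, p_fail, duration = test
    return duration / p_fail if p_fail > 0 else float("inf")


def order_for_fast_failure(tests: list[tuple[str, float, float]]) -> list[str]:
    """Order (name, fail_probability, duration_s) tuples by ascending t/p.

    Assumes failures are independent; under that assumption this order
    minimizes the expected time until the first failure (or the run ends).
    """
    return [name for name, _p, _d in sorted(tests, key=_sort_key)]


def expected_stop_time(tests: list[tuple[str, float, float]]) -> float:
    """Expected seconds until the first failure, or the end of the run, for a given order."""
    total, p_all_passed = 0.0, 1.0
    for _name, p_fail, duration in tests:
        total += p_all_passed * duration  # this test runs only if all earlier ones passed
        p_all_passed *= 1.0 - p_fail
    return total
```

For example, take `ui_snapshot` (p=0.02, 60 s), `charge_unit` (0.30, 5 s), `checkout_e2e` (0.40, 120 s), and `refund_api` (0.10, 20 s). The order becomes charge, refund, checkout, snapshot. That cuts the expected stop time from about 155 s to about 117 s compared with the listed order.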

This intelligent ordering can reduce time-to-first-failure from minutes to seconds in well-optimized pipelines. Developers receive actionable feedback before context-switching away from the code they just wrote, dramatically improving fix efficiency.

Predictive Failure Analysis

Advanced AI testing approaches predict failures before tests complete. Machine learning models trained on historical patterns recognize early indicators of impending failures and proactively alert developers. This predictive capability extends to production monitoring, identifying code changes likely to cause incidents based on test execution patterns.

Predictive analysis also identifies flaky tests, which pass or fail intermittently even when the code under test has not changed. Rather than trusting unreliable results, AI flags flaky tests for quarantine and prioritizes their stabilization. This housekeeping prevents noise from obscuring genuine quality signals.
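A common model-free flakiness signal is a test that both passes and fails on the same commit, since the code under test did not change between those runs. The sketch below assumes run history is available as (test, commit, passed) tuples, and all names are hypothetical. A production system would also weigh retries, environment differences, and recency.

```python
from collections import defaultdict


def find_flaky(history: list[tuple[str, str, bool]]) -> set[str]:
    """Return tests observed both passing and failing on the same commit."""
    outcomes: dict[tuple[str, str], set[bool]] = defaultdict(set)
    for test, commit, passed in history:
        outcomes[(test, commit)].add(passed)
    # Two distinct outcomes ({True, False}) on one commit = flaky signal.
    return {test for (test, _commit), seen in outcomes.items() if len(seen) == 2}
```

A test that passes on one commit and fails on the next is not flagged, because that may be a genuine regression. The resulting set could feed a quarantine list that the pipeline reports on but does not gate merges on.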

Connecting Testing Insights to Development Decisions

The most valuable feedback loop connects testing results directly to development planning. When certain components consistently require more fixes or generate more test failures, that information should influence architectural decisions and refactoring priorities. AI can surface these patterns from testing data that would otherwise remain buried in logs.
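Surfacing those patterns can start with simple aggregation. The sketch below assumes each test failure has already been traced to a source file, for example from a stack trace. It also uses a path-prefix-to-component mapping, which is hypothetical. Components are ranked by failure count.

```python
from collections import Counter

# Hypothetical mapping from source-path prefixes to owning components.
COMPONENTS = {"payments/": "payments", "auth/": "auth", "ui/": "frontend"}


def component_for(path: str) -> str:
    """Map a source path to its component, or 'unassigned' if no prefix matches."""
    for prefix, component in COMPONENTS.items():
        if path.startswith(prefix):
            return component
    return "unassigned"


def failure_hotspots(failed_paths: list[str], top_n: int = 3) -> list[tuple[str, int]]:
    """Rank components by how many test failures were traced to their files."""
    counts = Counter(component_for(path) for path in failed_paths)
    return counts.most_common(top_n)
```

Raw counts favor large or heavily tested components. Normalizing by the number of test runs per component gives a fairer signal for refactoring priorities.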

Teams using AI-powered test management tools gain visibility into testing effectiveness over time. Dashboards reveal coverage trends, highlight undertested areas, and track quality improvements across releases. This visibility transforms testing from a cost center into a strategic capability that accelerates confident delivery.

Frequently Asked Questions

What is the difference between AI testing and traditional test automation?

Traditional test automation executes predefined scripts identically regardless of context, while AI testing adapts behavior based on code changes, historical patterns, and intelligent analysis. AI enables capabilities like self-healing locators, dynamic test prioritization, and automatic test generation that static automation can't achieve.

How long does it take to implement AI in CI/CD testing pipelines?

Initial AI integration can begin producing value within weeks for targeted use cases like test prioritization. Comprehensive implementation, including generation, prediction, and optimization, typically spans three to six months depending on pipeline complexity and team readiness. Start small with specific problems rather than attempting wholesale transformation.

Do AI testing tools replace QA engineers?

AI tools augment rather than replace QA expertise. Engineers shift focus from repetitive maintenance and execution toward exploratory testing, test strategy, and quality architecture. The most effective implementations combine AI efficiency with human insight into business requirements and edge cases.

What infrastructure requirements support AI-enhanced testing?

AI testing tools typically require cloud compute resources for model training and inference, integration APIs for connecting with Git and issue tracking systems, and observability infrastructure for collecting test execution data. Most modern CI/CD platforms support these requirements through native integrations or marketplace extensions.

Transform Your QA Pipeline With Intelligent Test Management

AI in CI/CD testing automation creates opportunities for development teams to ship faster without sacrificing quality. Organizations that embrace these practices now will establish advantages that compound over time as AI models improve and testing intelligence accumulates.

Success demands rethinking testing workflows to leverage AI strengths while preserving human judgment where it matters most. Start with high-impact opportunities like test prioritization and self-healing scripts, then expand into generation and prediction as your team gains experience.

TestQuality provides the unified test management foundation that makes AI integration practical, with native GitHub and Jira integrations that connect testing workflows directly to development processes. Start your free trial today and experience how intelligent test management accelerates your path to confident, continuous delivery.