Before you can release your application, you must demonstrate that it works well and is free of bugs. That proof requires a test plan that incorporates many testing methodologies and employs a range of tests, each of which demonstrates that some component of your application is ready to be deployed. If you're searching for a testing tool (such as TestQuality), you'll want one that supports as many of these testing methodologies as possible.

The Testing Life Cycle

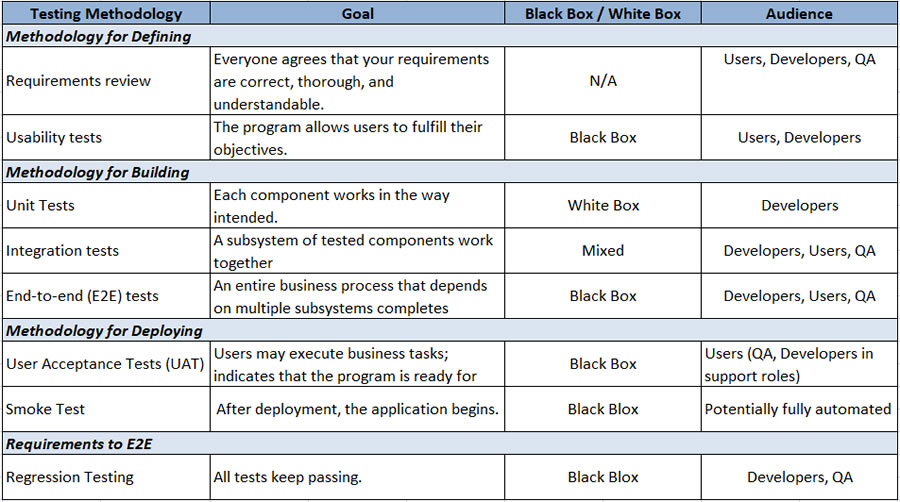

Testing can be divided into eight stages (or methodologies):

Defining

(1) Requirements review: Ensure that your requirements are ready to be used.

(2) Usability tests: Show that users can achieve their objectives using the program.

Building

(3) Unit tests: Ensure that each component performs as expected.

(4) Integration tests: Demonstrate that a subsystem of tested components functions properly.

(5) End-to-end (E2E) testing (sometimes referred to as system tests): Demonstrate that a complete business process, which depends on many tested subsystems, works.

Deploying

(6) User acceptance testing (UAT): Demonstrate that users can carry out their business tasks, signifying that the program is ready for deployment.

(7) Smoke test (also known as a sanity check): Verify that the application starts after deployment.

(8) Regression testing: In addition to these test stages and their associated methodologies, there is regression testing, which demonstrates that previous tests still pass when changes are made. Regression testing is unique in that it demonstrates not only that your application still performs as intended, but also that your tests do (for example, that your tests aren't producing "false positives" by failing when there isn't an issue).

Regression testing begins in the requirements phase and continues as long as changes are made, involving both developers and QA (but not users). Regression testing is your strongest line of defense against software failure, but it is usually only economically feasible if your tests are automated. Automation also provides consistency, which matters because it ensures you're running the same test you ran previously.
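As a minimal sketch of why automation makes regression testing cheap and consistent, the Python below re-runs a fixed set of recorded cases against the current code and reports any whose output no longer matches a previously accepted baseline. The `calculate_overtime` function and the baseline values are hypothetical stand-ins for your own code and approved results.

```python
# A minimal automated regression check (sketch).
# `calculate_overtime` is a hypothetical function under test; BASELINE holds
# outputs that were reviewed and accepted in an earlier test run.

def calculate_overtime(hours_worked: float, standard_hours: float = 40.0) -> float:
    """Return the overtime hours for a week (hypothetical business rule)."""
    return max(0.0, hours_worked - standard_hours)

# Recorded inputs mapped to their previously accepted outputs.
BASELINE = {
    (38.0,): 0.0,
    (40.0,): 0.0,
    (45.5,): 5.5,
}

def run_regression(func, baseline):
    """Re-run every recorded case; return the cases whose output changed."""
    failures = []
    for args, expected in baseline.items():
        actual = func(*args)
        if actual != expected:
            failures.append((args, expected, actual))
    return failures

if __name__ == "__main__":
    failures = run_regression(calculate_overtime, BASELINE)
    for args, expected, actual in failures:
        print(f"REGRESSION {args}: expected {expected}, got {actual}")
    print("regression suite passed" if not failures else f"{len(failures)} regression(s)")
```

Because the same cases run the same way every time, a failure points either at a change in the code or at a test (or baseline) that needs updating, which is exactly the dual check regression testing provides.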

As you can see, this list is completely customisable. Not all teams, for example, will incorporate UAT for every deployment; other teams may employ UAT early in the process as part of having users authorize further work. In addition to deployment, many teams will incorporate smoke testing in the integration, E2E, and UAT stages. It is critical that you employ the correct testing approaches for your application at the right time.

Testing Methodologies for Getting Requirements Correct

Requirements define the "right" answer for your tests and contribute to "the definition of done" in an Agile context. Some requirements-gathering techniques (user stories, for instance) even build testability into the way a requirement is described.

A requirements review can be used to test requirements (also called validating your requirements). The purpose is to demonstrate that the requirements are coherent, complete, and understood in the same way by all stakeholders. It involves at least users and developers, and it frequently exposes unspoken assumptions held by those two groups. Involving Quality Assurance (QA) at this early stage simplifies testing later on. This stage is shorter for Agile teams because, by working together on a product over time, Agile teams effectively practice "continuous validation."

If the program includes a frontend or outputs that users will interact with, developers and users can define those interactions with mock-ups, paper storyboards, or whiteboards. Usability testing also helps refine user needs by expressing them in a form that users understand: the application's UI.

Identifying Functional and Non-Functional Requirements

Not all requirements come from the application's users. Many requirements, such as reliability, response times, and accessibility, apply across a variety of applications (though these requirements may also be set or modified at the project level).

As the number of requirements grows, it may be necessary to categorize them as functional or non-functional. A functional requirement describes the business functionality of the program ("When an employee ID is entered, the timesheet for that employee will be displayed"). A non-functional requirement describes how well the program meets its functional requirements ("Average response time will be less than three seconds").
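To make the distinction concrete, here is a sketch of how each example requirement might be expressed as an automated test. The `get_timesheet` function and its in-memory data are hypothetical; only the three-second threshold comes from the example requirement.

```python
import statistics
import time

# Hypothetical in-memory data store and lookup function under test.
TIMESHEETS = {"E100": [8, 8, 7.5, 8, 6], "E200": [4, 4, 4, 4, 4]}

def get_timesheet(employee_id: str) -> list:
    """Return the timesheet for the given employee ID."""
    return TIMESHEETS[employee_id]

def test_functional_timesheet_lookup():
    # Functional: entering an employee ID displays that employee's timesheet.
    assert get_timesheet("E100") == [8, 8, 7.5, 8, 6]

def test_nonfunctional_average_response_time(samples: int = 20):
    # Non-functional: the average response time must be under three seconds.
    durations = []
    for _ in range(samples):
        start = time.perf_counter()
        get_timesheet("E100")
        durations.append(time.perf_counter() - start)
    assert statistics.mean(durations) < 3.0

if __name__ == "__main__":
    test_functional_timesheet_lookup()
    test_nonfunctional_average_response_time()
    print("both requirement tests passed")
```

The functional test checks *what* the program returns; the non-functional test checks *how well* it returns it, so it measures timing rather than content.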

The line between the two categories has shifted over time; for example, security and privacy requirements are now more likely to be categorized as functional rather than non-functional. As a result, some teams will treat a requirement such as "An employee will not be able to access another employee's timesheet" as non-functional, whilst other teams will classify it as functional. However a requirement is classified, it must be addressed during testing.

Most non-functional requirements are tested during the integration, E2E, and UAT phases. However, moving any test earlier in the process (the "shift-left" approach) reveals problems when they are less expensive to fix. Instead of waiting until integration testing, have developers demonstrate during the unit test phase that suspicious inputs are rejected or that users can perform only authorized activities.
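As a sketch of shifting these checks left, the unit tests below verify at the component level both that a malformed employee ID is rejected and that a user can read only their own timesheet (the example access rule mentioned above). The ID pattern, the `read_timesheet` function, and its rules are hypothetical.

```python
import re
import unittest

# Hypothetical rule: employee IDs are "E" followed by three to six digits.
EMPLOYEE_ID_PATTERN = re.compile(r"E\d{3,6}")

TIMESHEETS = {"E100": [8, 8, 8], "E200": [4, 4, 4]}

def read_timesheet(requesting_user: str, employee_id: str) -> list:
    """Return a timesheet, rejecting suspicious input and unauthorized access."""
    if not EMPLOYEE_ID_PATTERN.fullmatch(employee_id):
        raise ValueError("malformed employee ID")
    if requesting_user != employee_id:
        raise PermissionError("users may only view their own timesheet")
    return TIMESHEETS[employee_id]

class ShiftLeftSecurityTests(unittest.TestCase):
    def test_rejects_suspicious_input(self):
        # An injection-style string must never reach the data layer.
        with self.assertRaises(ValueError):
            read_timesheet("E100", "E100' OR '1'='1")

    def test_blocks_access_to_other_employee(self):
        with self.assertRaises(PermissionError):
            read_timesheet("E100", "E200")

    def test_allows_own_timesheet(self):
        self.assertEqual(read_timesheet("E100", "E100"), [8, 8, 8])

if __name__ == "__main__":
    unittest.main()
```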

Non-functional tests can also be added to the smoke test that follows UAT. Smoke tests often demonstrate that at least one person can log in (proving that authentication works), and they frequently check for excessive response times.
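A smoke test like this can be a very small script. The sketch below assumes hypothetical `login` and `load_home_page` callables supplied by your deployment (for example, thin wrappers around HTTP requests) and a response-time budget you choose; it checks that each step succeeds and finishes within that budget.

```python
import time
from typing import Callable

def timed(step: Callable[[], bool]) -> tuple[bool, float]:
    """Run one smoke-test step and return (succeeded, elapsed seconds)."""
    start = time.perf_counter()
    ok = step()
    return ok, time.perf_counter() - start

def smoke_test(login: Callable[[], bool],
               load_home_page: Callable[[], bool],
               max_seconds: float = 3.0) -> list[str]:
    """Return a list of problems; an empty list means the smoke test passed."""
    problems = []
    for name, step in (("login", login), ("home page", load_home_page)):
        ok, elapsed = timed(step)
        if not ok:
            problems.append(f"{name} failed")
        elif elapsed > max_seconds:
            problems.append(f"{name} took {elapsed:.2f}s (limit {max_seconds}s)")
    return problems

if __name__ == "__main__":
    # Stand-in steps for illustration; replace with real calls to your deployment.
    issues = smoke_test(login=lambda: True, load_home_page=lambda: True)
    print("smoke test passed" if not issues else issues)
```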

Testing Methodologies for Building: Types of Tests

The unit test stage focuses on the smallest testable components and tests them in isolation. Later test phases (integration, E2E, and UAT) involve more components working together, and the range of tests that can be included grows with the number of components:

API testing: This ensures that an application can communicate with other apps.

Compatibility testing: This ensures that a program functions as expected on a set of platforms.

Stress testing: This demonstrates that an application works as expected under predicted loads and shows how it behaves when those loads are exceeded.

Security testing: This shows that only authenticated and authorized users can access system functionality (and only when their activities do not appear suspicious).
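As a rough illustration of load and stress testing, the sketch below fires concurrent calls at a function and reports how many failed and the approximate 95th-percentile latency. The `handle_request` function, the call counts, and the concurrency level are hypothetical; real load tests usually target a deployed service with a dedicated tool.

```python
import time
from concurrent.futures import ThreadPoolExecutor

def handle_request(n: int) -> int:
    """Hypothetical operation under load: simulate a little work."""
    time.sleep(0.01)
    return n * 2

def call_and_time(n: int) -> tuple[bool, float]:
    """Make one call; return (correct result, elapsed seconds)."""
    start = time.perf_counter()
    try:
        ok = handle_request(n) == n * 2
    except Exception:
        ok = False
    return ok, time.perf_counter() - start

def load_test(total_calls: int = 200, concurrency: int = 20) -> dict:
    """Issue `total_calls` requests spread across `concurrency` worker threads."""
    with ThreadPoolExecutor(max_workers=concurrency) as pool:
        results = list(pool.map(call_and_time, range(total_calls)))
    latencies = sorted(elapsed for _, elapsed in results)
    # Approximate 95th percentile using the nearest lower rank.
    p95 = latencies[int(0.95 * (len(latencies) - 1))]
    return {"failures": sum(1 for ok, _ in results if not ok), "p95_seconds": p95}

if __name__ == "__main__":
    print(load_test())
```

Running it at the predicted concurrency checks expected load; raising `concurrency` until failures or latency exceed your limits turns it into a stress test.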

Each type of test shows something different about your application and has its own success criteria. Separate criteria for compatibility testing (including cross-browser testing) can be set for different test runs. What counts as "success" when your app runs on a device with a 19" screen may differ from what counts as "success" on a device with a 4.5" screen, because you want your responsive app to adapt its UI, for example by restricting functionality on the smaller screen.
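One way to encode different success criteria per platform is to run the same check over a list of device profiles, each carrying its own expected result. In this sketch, the `visible_features` function, the feature names, and the 7-inch breakpoint are hypothetical.

```python
# Hypothetical responsive UI rule: small screens hide the reports dashboard.
SMALL_SCREEN_MAX_INCHES = 7.0

def visible_features(screen_inches: float) -> set[str]:
    """Return the UI features shown at a given screen size."""
    features = {"timesheet", "login"}
    if screen_inches > SMALL_SCREEN_MAX_INCHES:
        features.add("reports_dashboard")
    return features

# Each device profile defines its own notion of "success".
DEVICE_PROFILES = [
    {"name": "desktop 19in", "screen_inches": 19.0,
     "expected": {"timesheet", "login", "reports_dashboard"}},
    {"name": "phone 4.5in", "screen_inches": 4.5,
     "expected": {"timesheet", "login"}},
]

def run_compatibility_checks(profiles) -> dict[str, bool]:
    """Map each device name to whether it met its own success criteria."""
    return {p["name"]: visible_features(p["screen_inches"]) == p["expected"]
            for p in profiles}

if __name__ == "__main__":
    for device, passed in run_compatibility_checks(DEVICE_PROFILES).items():
        print(f"{device}: {'PASS' if passed else 'FAIL'}")
```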

Exploratory testing is also conducted during the integration, E2E, and UAT stages. Exploratory testing lets individual testers (who may be developers, users, or QA) explore an application to find issues that have not yet been identified. In exploratory testing, testers design and run tests at the same time in order to find defects.

You'll decide which of these types of tests you need based on what you want to show about your application, what qualifies as "success" for each type of test, and how early in the process you'll use each type. API testing, for example, is frequently performed during the integration stage to show that two services can communicate with each other via web calls, queues, or events. However, API testing can be performed as early as the unit testing stage, allowing developers to build an application with confidence that its interfaces with other services will work well.
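To illustrate API testing at the unit stage, the sketch below tests a component that calls another service, replacing that service with a `unittest.mock.Mock` so the test can check both sides of the interface: the request the component sends and how it handles the response. The `PayrollClient` class, the `/timesheets/{id}` path, and the `get_json` transport method are hypothetical.

```python
import unittest
from unittest.mock import Mock

class PayrollClient:
    """Hypothetical component that calls a timesheet service."""

    def __init__(self, transport):
        # `transport` is any object exposing get_json(path) -> dict.
        self.transport = transport

    def total_hours(self, employee_id: str) -> float:
        data = self.transport.get_json(f"/timesheets/{employee_id}")
        return sum(entry["hours"] for entry in data["entries"])

class PayrollClientApiTest(unittest.TestCase):
    def test_sends_expected_request_and_parses_response(self):
        # The mock stands in for the other service's API.
        transport = Mock()
        transport.get_json.return_value = {"entries": [{"hours": 8}, {"hours": 7.5}]}
        client = PayrollClient(transport)

        self.assertEqual(client.total_hours("E100"), 15.5)
        transport.get_json.assert_called_once_with("/timesheets/E100")

if __name__ == "__main__":
    unittest.main()
```

At the integration stage, the same assertions could be run with a real transport pointed at the other service.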

Testing Methodologies for Building: Changing Audiences and Perspectives

Typically, only the developer is involved in the unit testing step. End users become more involved in validating tests as testing progresses to later phases (taking over completely at UAT). Users can become even more active in the integration, E2E, and UAT stages by using tools such as test recorders and keyword-driven testing. QA's role as the dedicated manager of the testing process grows as tests become more complex in later stages.
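Keyword-driven testing lets non-developers describe tests as rows of keywords and arguments, while developers implement each keyword once. A minimal sketch follows; the keywords, the `FakeApp` class standing in for the application, and the row format are all hypothetical, and dedicated tools offer far more.

```python
class FakeApp:
    """Hypothetical stand-in for the application under test."""

    def __init__(self):
        self.user = None
        self.screen = "login"

    def log_in(self, user):
        self.user = user
        self.screen = "home"

    def open_screen(self, name):
        if self.user is None:
            raise RuntimeError("not logged in")
        self.screen = name

def _check(condition, message):
    if not condition:
        raise AssertionError(message)

# Developers implement each keyword once.
KEYWORDS = {
    "Log In": lambda app, user: app.log_in(user),
    "Open Screen": lambda app, name: app.open_screen(name),
    "Screen Should Be": lambda app, name: _check(
        app.screen == name, f"expected {name}, got {app.screen}"),
}

def run_keyword_test(rows, app):
    """Execute a test written as (keyword, argument) rows."""
    for keyword, argument in rows:
        KEYWORDS[keyword](app, argument)

# A test a user or QA analyst could write without coding.
TIMESHEET_TEST = [
    ("Log In", "E100"),
    ("Screen Should Be", "home"),
    ("Open Screen", "timesheet"),
    ("Screen Should Be", "timesheet"),
]

if __name__ == "__main__":
    run_keyword_test(TIMESHEET_TEST, FakeApp())
    print("keyword test passed")
```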

Because unit tests are frequently written by developers for their own code, they typically follow a white-box testing approach, leveraging the developer's understanding of the internal workings of components to drive test design. The purpose of white-box testing at the unit testing stage is to show that the component works as expected.

As you might expect, white-box testing contrasts with black-box testing, which ignores how the code performs its function and focuses on whether the component meets requirements. Both forms of testing are required. Relying purely on black-box testing, for example, can produce code that works in ways you don't understand, and when conditions change, such code will eventually (and unexpectedly) break.
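The difference shows up in how test cases are chosen. In the sketch below, the hypothetical `shipping_fee` function is tested twice: the white-box tests are derived from its code (one case per branch, placed at the boundary the developer knows about), while the black-box tests are derived only from a stated requirement. The function and its rules are illustrative assumptions.

```python
import unittest

def shipping_fee(order_total: float) -> float:
    """Hypothetical rule: orders of 50.00 or more ship free; others pay 4.99."""
    if order_total >= 50.0:
        return 0.0
    return 4.99

class WhiteBoxTests(unittest.TestCase):
    # Chosen by reading the code: exercise each branch at the exact boundary.
    def test_free_branch_at_boundary(self):
        self.assertEqual(shipping_fee(50.0), 0.0)

    def test_paid_branch_just_below_boundary(self):
        self.assertEqual(shipping_fee(49.99), 4.99)

class BlackBoxTests(unittest.TestCase):
    # Chosen from the requirement only: "Large orders ship free; small orders pay a fee."
    def test_large_order_ships_free(self):
        self.assertEqual(shipping_fee(120.0), 0.0)

    def test_small_order_pays_fee(self):
        self.assertGreater(shipping_fee(10.0), 0.0)

if __name__ == "__main__":
    unittest.main()
```

The black-box tests would still pass if the boundary silently moved to 60.00; only the white-box tests, written with knowledge of the code, would catch that.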

Testing shifts from white-box to black-box as it progresses from unit testing to integration testing and more people become involved. Choosing the components for an integration test is still a white-box decision: they are selected because they are known to work together in a particular way. However, validation in integration testing tends to focus on "Did we get the correct output?" (on the assumption that the correct result could only be produced if the components worked together "as intended"), which is a black-box approach.

E2E testing, UAT, and smoke tests are almost entirely black-box, because the purpose of those phases is to show that the application meets its requirements (both functional and non-functional). This is where involving QA in requirements reviews pays dividends, since it gives QA a clear sense of what constitutes a successful test in these phases.

Testing Methodologies for Deploying

End users with the authority to sign off on the application's deployment participate in UAT. The purpose here is to demonstrate that the final stakeholder is satisfied with the changes that are about to be deployed. QA and developers are involved, but mostly in setting up the test environment and documenting any issues that arise.

Smoke testing can be fully automated and need not involve developers, end users, or QA: if a problem launching the program is found, the deployment can be rolled back immediately. However, as part of a smoke test, some teams have a user log in to the application, with a QA person or developer nearby to report any issues. Other teams do exploratory testing during the smoke test to look for post-deployment issues.
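A fully automated version of this flow might look like the sketch below: deploy, run the smoke check, and roll back if it fails. The `deploy`, `smoke_check`, and `rollback` callables are hypothetical hooks into your own deployment tooling, and the version strings are illustrative.

```python
from typing import Callable

def deploy_with_smoke_test(deploy: Callable[[str], None],
                           smoke_check: Callable[[], bool],
                           rollback: Callable[[str], None],
                           new_version: str,
                           previous_version: str) -> bool:
    """Deploy `new_version`; if the smoke check fails, restore `previous_version`.

    Returns True if the new version stays deployed.
    """
    deploy(new_version)
    try:
        healthy = smoke_check()
    except Exception:
        healthy = False  # treat a crashing check as a failed smoke test
    if not healthy:
        rollback(previous_version)
    return healthy

if __name__ == "__main__":
    log = []
    kept = deploy_with_smoke_test(
        deploy=lambda v: log.append(f"deploy {v}"),
        smoke_check=lambda: False,  # simulate an app that fails to start
        rollback=lambda v: log.append(f"rollback to {v}"),
        new_version="2.4.0",
        previous_version="2.3.1",
    )
    print(kept, log)
```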

Manage the Testing Process

If you're thinking that's a lot of testing methodologies to manage, and more than you have time or budget for … well, you're probably right. You'll need to customize this process for your application. You'll only want to apply tests where the risk warrants testing, and you'll omit tests where the risk of failure is low (or where possible failures can be easily mitigated). For instance, you may know enough about the demand on your application and your IT infrastructure that stress testing doesn't make sense.
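One common way to decide where risk warrants testing is to score each candidate test area by the likelihood and impact of failure, then keep only the areas above a threshold. The areas, the 1-to-5 scales, and the threshold in this sketch are hypothetical; calibrate them for your own application.

```python
from dataclasses import dataclass

@dataclass
class RiskArea:
    name: str
    likelihood: int  # chance of failure, 1 (rare) to 5 (likely)
    impact: int      # cost of failure, 1 (minor) to 5 (severe)

    @property
    def risk(self) -> int:
        return self.likelihood * self.impact

def select_by_risk(areas, threshold: int = 8):
    """Keep areas whose risk score meets the threshold, highest risk first."""
    chosen = [a for a in areas if a.risk >= threshold]
    return sorted(chosen, key=lambda a: a.risk, reverse=True)

AREAS = [
    RiskArea("timesheet security", likelihood=3, impact=5),  # risk 15
    RiskArea("stress testing", likelihood=1, impact=4),      # risk 4
    RiskArea("report export", likelihood=3, impact=3),       # risk 9
    RiskArea("UI color theme", likelihood=2, impact=1),      # risk 2
]

if __name__ == "__main__":
    for area in select_by_risk(AREAS):
        print(f"{area.name}: risk {area.risk}")
```

Here stress testing falls below the threshold, mirroring the case above where you already know enough about demand and infrastructure to skip it.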

If, on the other hand, you need to do more testing than your resources allow (or simply want to free up testing resources to focus on adding features), your first step should be to check for duplication among your tests (to ensure that you don't have two tests that show the same thing). Can a test that uses only a single API be moved out of integration testing and into unit testing? Working in sprints also reduces the surface area that has to be tested.
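If each test is tagged with the requirements it demonstrates, candidate duplicates can be found mechanically by grouping tests that demonstrate exactly the same set of requirements. The test names and requirement IDs below are hypothetical, and a match is only a candidate for review: two tests with the same requirements may still differ in stage or conditions.

```python
from collections import defaultdict

# Hypothetical mapping from each test to the requirements it demonstrates.
TEST_COVERAGE = {
    "unit_id_validation": {"REQ-7"},
    "integration_timesheet_lookup": {"REQ-1", "REQ-7"},
    "integration_lookup_by_badge": {"REQ-1", "REQ-7"},
    "e2e_submit_timesheet": {"REQ-1", "REQ-2"},
}

def find_duplicate_groups(coverage):
    """Group tests that demonstrate exactly the same set of requirements."""
    groups = defaultdict(list)
    for test, requirements in coverage.items():
        groups[frozenset(requirements)].append(test)
    return [sorted(tests) for tests in groups.values() if len(tests) > 1]

if __name__ == "__main__":
    for group in find_duplicate_groups(TEST_COVERAGE):
        print("possible duplicates:", ", ".join(group))
```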

You should also look for tools and strategies that reduce your testing effort while maintaining or improving test effectiveness, or that improve effectiveness without increasing effort. Automating frequent, repetitive tests (for example, with TestQuality's test cycles) not only enables regression testing but also allows you to test in additional environments, increasing the effectiveness of your testing.


Techniques for distributing the testing workload are also worth investigating. For example, incorporating tools that allow users to write E2E tests not only automates additional tests and spreads the effort, but also increases the efficacy of the tests.

Here's one more tip (and one more bit of terminology): don't worry about the number of lines of code executed during testing (code coverage) unless you're aiming to shrink your code base by deleting unnecessary code. If your code passes all of your tests, then your code has passed all of your tests: you have proven everything about your application that you set out to prove. It is now ready for use.

TestQuality can help your QA specialists and software testers increase test efficiency. Many teams add new tests every week, and around twice a year they find that overall test duration has grown too long, so they add hardware resources for automated testing or staff for manual and free-play testing.

TestQuality can simplify test case creation and organization. It is competitively priced, and it is free when used with free GitHub repositories. It provides rich, flexible reporting that can help you visualize and understand where you and your development or QA team stand in your project's quality lifecycle. Also look for analytics that help you assess the quality and effectiveness of your test cases and testing efforts, so you can be sure you're building and running the most effective tests for your effort.