The buzz around AI-generated code is undeniable. From intelligent autocompletion to entire function generation, AI is rapidly changing how we write software. Developers are shipping code faster, freeing up time for more complex problem-solving. But if you're a QA professional or a developer wearing a quality hat, a familiar question quickly arises: "How do we actually ensure this AI-generated code is reliable, secure, and meets our standards?" The four scenarios below show where that question tends to bite.

Scenario 1: The "Looks Good on Paper" Feature – Subtle Logic Errors

The Pain Point: An AI swiftly generates a complex new data validation function for your e-commerce platform. On initial inspection, the code looks clean, follows conventions, and even passes basic unit tests provided by the AI itself. However, when deployed, customers sporadically report incorrect shipping calculations or discounts not being applied in specific scenarios (e.g., combining two different coupon types). This isn't a syntax error; it's a subtle logical flaw the AI missed.

Why it's challenging: The AI optimized for common cases, but it lacked the deeper business context or experience to foresee edge cases. Manual code review might miss it because the logic is intricate and hard to trace. Without specific, scenario-based tests, these subtle bugs can slip into production, leading to customer dissatisfaction and costly hotfixes.
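To make "specific, scenario-based tests" concrete, here is a minimal Python sketch. The function `apply_discounts` and its pricing rule (percentage first, then fixed amount, never below zero) are assumptions made up for illustration, not the logic of any real platform; the point is that each business scenario, including the coupon combination, gets its own named row with an expected total.

```python
from decimal import Decimal


def apply_discounts(subtotal, percent_off=Decimal("0"), fixed_off=Decimal("0")):
    """Hypothetical rule: apply the percentage first, then the fixed amount,
    and never let the total drop below zero."""
    after_percent = subtotal * (Decimal("1") - percent_off / Decimal("100"))
    total = after_percent - fixed_off
    return max(total, Decimal("0")).quantize(Decimal("0.01"))


# Each row is one business scenario: (description, subtotal, percent, fixed, expected)
SCENARIOS = [
    ("percentage only", "100", "10", "0", "90.00"),
    ("fixed only", "100", "0", "5", "95.00"),
    ("percentage plus fixed", "100", "10", "5", "85.00"),
    ("fixed larger than cart", "3", "0", "5", "0.00"),
]


def run_scenarios():
    """Return a list of (description, actual, expected) for every failing scenario."""
    failures = []
    for desc, subtotal, pct, fixed, expected in SCENARIOS:
        actual = apply_discounts(Decimal(subtotal), Decimal(pct), Decimal(fixed))
        if actual != Decimal(expected):
            failures.append((desc, actual, Decimal(expected)))
    return failures


if __name__ == "__main__":
    for desc, actual, expected in run_scenarios():
        print(f"FAIL {desc}: got {actual}, expected {expected}")
```

A table like `SCENARIOS` is easy for a product owner to review, and adding a row for a newly reported edge case is cheaper than tracing intricate logic by hand.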

Scenario 2: The "Over-Optimized" Performance Boost – Unexpected Side Effects

The Pain Point: Your AI assistant is tasked with refactoring a legacy module to improve its performance. The AI delivers a highly optimized, concise piece of code that, on paper, should run faster. However, after integration, other modules that depend on this one start exhibiting strange behavior – data corruption in specific transactions, or an external API call timing out more frequently. The AI optimized for a singular goal (speed) without fully understanding the broader system's interdependencies and implicit contracts.

Why it's challenging: This isn't just about the new code; it's about its ripple effect. AI might not understand the entire system architecture or all implicit side effects. Without comprehensive integration and system-level tests that cover cross-module interactions and expected data flows, such issues are difficult to catch until they manifest as larger system failures.
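One way to surface a broken implicit contract is a characterization check that runs the old and new implementations side by side. The sketch below is illustrative only: `legacy_normalize` and `refactored_normalize` are invented stand-ins, and the assumed implicit contract is "the caller's list is not modified."

```python
import copy


def legacy_normalize(orders):
    """Stand-in for the legacy module: returns a new sorted list, input untouched."""
    return sorted(orders, key=lambda o: o["id"])


def refactored_normalize(orders):
    """Stand-in for an 'optimized' rewrite: sorts in place to avoid a copy."""
    orders.sort(key=lambda o: o["id"])
    return orders


def check_contract(func, sample):
    """Compare func against the legacy behaviour and report contract violations."""
    original = copy.deepcopy(sample)
    expected = legacy_normalize(copy.deepcopy(sample))
    result = func(sample)
    problems = []
    if result != expected:
        problems.append("output differs from legacy behaviour")
    if sample != original:
        problems.append("caller's input was mutated")
    return problems


if __name__ == "__main__":
    print(check_contract(legacy_normalize, [{"id": 2}, {"id": 1}]))
    print(check_contract(refactored_normalize, [{"id": 2}, {"id": 1}]))
```

Here the refactored version returns the right answer yet still fails the check, which is exactly the kind of side effect that output-only unit tests would not reveal.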

Scenario 3: The "Quick Fix" That Breaks the Build – Integration Headaches

The Pain Point: A developer uses an AI to quickly generate a fix for a minor bug reported in a sprint. The AI generates the code, and a local test passes. The developer pushes the code. Suddenly, the CI/CD pipeline fails, not due to a bug in the new code itself, but because the AI's generation introduced a dependency conflict, an incompatible library version, or a new vulnerability that wasn't present before.
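A lightweight guard against this kind of surprise is to diff pinned dependencies before and after a change, so a reviewer sees what moved. The following is a rough sketch, assuming dependencies are pinned as `name==version` lines; the helper names and the sample package list are made up for illustration, and it does not replace a real resolver or vulnerability scanner.

```python
def parse_pins(text):
    """Parse 'name==version' lines into a dict, ignoring comments and blanks."""
    pins = {}
    for line in text.splitlines():
        line = line.split("#", 1)[0].strip()
        if "==" in line:
            name, version = line.split("==", 1)
            pins[name.strip().lower()] = version.strip()
    return pins


def diff_pins(before, after):
    """Summarize added, removed, and changed pins between two requirement files."""
    old, new = parse_pins(before), parse_pins(after)
    added = sorted(set(new) - set(old))
    removed = sorted(set(old) - set(new))
    changed = {
        name: (old[name], new[name])
        for name in sorted(set(old) & set(new))
        if old[name] != new[name]
    }
    return {"added": added, "removed": removed, "changed": changed}


if __name__ == "__main__":
    before = "requests==2.31.0\nurllib3==2.0.7\n"
    after = "requests==2.31.0\nurllib3==1.26.18\nleftpad==0.1.0  # hypothetical package\n"
    print(diff_pins(before, after))
```

Any non-empty entry in the result is a prompt for a human to ask why an AI-generated fix needed to touch dependencies at all.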

Scenario 4: The "Mystery Meat" Code – Understanding and Maintaining

The Pain Point: An AI generates a complex algorithm that works perfectly. However, weeks later, a new team member needs to modify it or debug an issue. They stare at the code, generated with advanced patterns and possibly obscure optimizations, and struggle to understand its intent or how it fits into the larger architecture. What started as a time-saver becomes a maintenance headache.

Why it's challenging: Readability, maintainability, and clear intent are crucial for long-term project health. AI-generated code can sometimes be functionally correct but hard for humans to reason about. Clear documentation, human-readable tests, and a structured approach to defining expectations become vital.

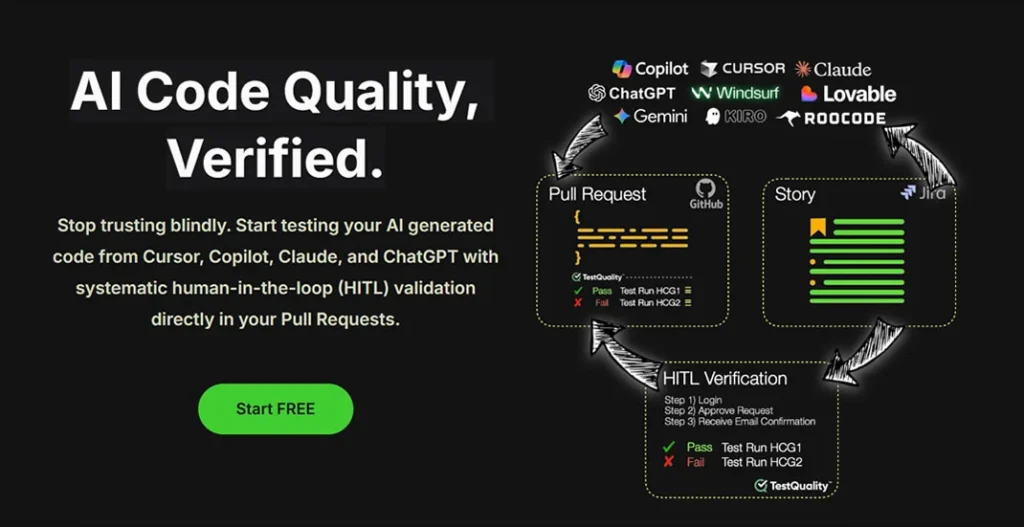

TestQuality: Your Navigator Through the AI Code Landscape

These scenarios underscore a critical need: the human element in quality assurance isn't going away; it's evolving. We need tools that empower QA professionals and developers to work smarter with AI, not just faster. This is where TestQuality becomes an invaluable partner.

Here’s how TestQuality helps you address these real-world challenges:

- Human-in-the-Loop Pull Request Verification: For every piece of AI-generated code, whether it's a new feature or a quick fix, TestQuality integrates directly into your GitHub, GitLab, or Bitbucket PR workflow. It provides a clear view of the test coverage and status for that specific code change. This means that before any AI-generated code merges, human reviewers have a dashboard showing passed, failed, or pending tests, immediately highlighting potential subtle logic errors (Scenario 1) or integration clashes (Scenario 3).

- Gherkin & BDD for Unambiguous Requirements: To combat the "looks good on paper" and "mystery meat" issues (Scenarios 1 and 4), TestQuality's support for Gherkin and Behavior-Driven Development (BDD) is crucial. You can define expected system behaviors in plain, human-readable language:

Feature: Customer Discount Application

As a customer

I want to apply multiple discount codes

So that I can get the best possible price.

Scenario: Applying one percentage and one fixed amount discount

Given I have "$100" worth of items in my cart

And I apply a "10%" discount code

When I apply a "$5" fixed amount discount code

Then my total should be "$85"

These living specifications provide an unambiguous "contract" for what the AI-generated code must do. When the AI delivers code, your BDD tests in TestQuality act as the ultimate validator, ensuring the AI understood the intent and not just the syntax. This also makes the "mystery meat" code far more comprehensible, as its behavior is clearly documented by the tests themselves.

- Comprehensive Test Management & Traceability: For complex scenarios like unexpected side effects (Scenario 2) or integration failures (Scenario 3), you need a holistic view. TestQuality allows you to:

- Link test cases directly to requirements and user stories, providing full traceability.

- Manage various types of tests – unit, integration, system, performance – and track their execution status centrally.

- Generate insightful reports that highlight test coverage gaps, allowing you to quickly identify areas where AI-generated code might be under-tested or causing regressions in interconnected modules.
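The idea behind traceability and coverage-gap reporting can be sketched in a few lines of plain Python. This is not TestQuality's API or data model; `LinkedTest`, `coverage_gaps`, and the requirement IDs are hypothetical names used only to show how linking tests to requirements exposes what is untested or failing.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LinkedTest:
    name: str
    kind: str          # e.g. "unit", "integration", "system", "performance"
    requirement: str   # ID of the requirement or user story this test verifies
    passed: bool


def coverage_gaps(requirements, results):
    """Map each requirement with a problem to 'untested' or 'failing'."""
    by_req = {req: [] for req in requirements}
    for result in results:
        by_req.setdefault(result.requirement, []).append(result)
    report = {}
    for req in requirements:
        linked = by_req[req]
        if not linked:
            report[req] = "untested"
        elif not all(r.passed for r in linked):
            report[req] = "failing"
    return report


if __name__ == "__main__":
    requirements = ["REQ-1", "REQ-2", "REQ-3"]  # hypothetical requirement IDs
    results = [
        LinkedTest("test_percent_discount", "unit", "REQ-1", True),
        LinkedTest("test_checkout_flow", "integration", "REQ-2", False),
    ]
    print(coverage_gaps(requirements, results))
```

Requirements that do not appear in the report are linked to at least one test and all of their linked tests pass; everything else is a candidate for closer human review before AI-generated changes are merged.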

TestQuality's integration with GitHub PR ensures that every PR is thoroughly tested before being merged into the main branch.

By integrating this level of granular, human-led testing directly into your Pull Request workflow, TestQuality empowers your team to confidently leverage the speed of AI while eliminating the risks of blind trust.

The shift to AI-assisted code generation is profound, but it doesn't mean sacrificing quality. Instead, it demands a more intelligent, adaptable approach to testing. By embedding robust verification processes and leveraging powerful test management tools like TestQuality, QA professionals and developers can confidently harness the speed of AI while ensuring the integrity and reliability of their software.

Ready to systematically validate your AI-generated code and elevate your project's quality to 'Verified'?

Discover how TestQuality integrates exclusive human-in-the-loop validation directly into your GitHub Pull Requests, providing the tools you need for comprehensive PR testing. Stop trusting blindly. Start verifying.

Start Free with TestQuality today and build truly high-quality software.